What to expect from this blog post?

In this blog post, I will discuss Docker briefly before diving into detailed instructions for using Docker in your DevOps workflow. I will cover running Docker containers, Docker networking, Docker volumes, building Docker images with a Dockerfile, and pushing them to a container registry.

Why Docker?

If you go back 20–30 years, you had physical hardware with an operating system (kernel and UI) installed on top of it. To run an application, we had to compile the code and sort out all of its dependencies. If we needed another application, or more capacity to handle spikes in application workload, we had to purchase new hardware, then install and configure it.

Virtualization added an extra layer between the hardware and the operating system, called a hypervisor. It allowed users to run multiple isolated virtual machines, each with its own OS, on the same hardware.

We still had to install software and set up dependencies on every VM. Applications were not portable: an application that worked on one machine often failed on another.


What is docker?

In simple words, Docker is a way to package software so it can run on any machine that has Docker installed (Windows, macOS, and Linux).

Docker revolutionized the way we build software by making microservice-based application development far more practical.

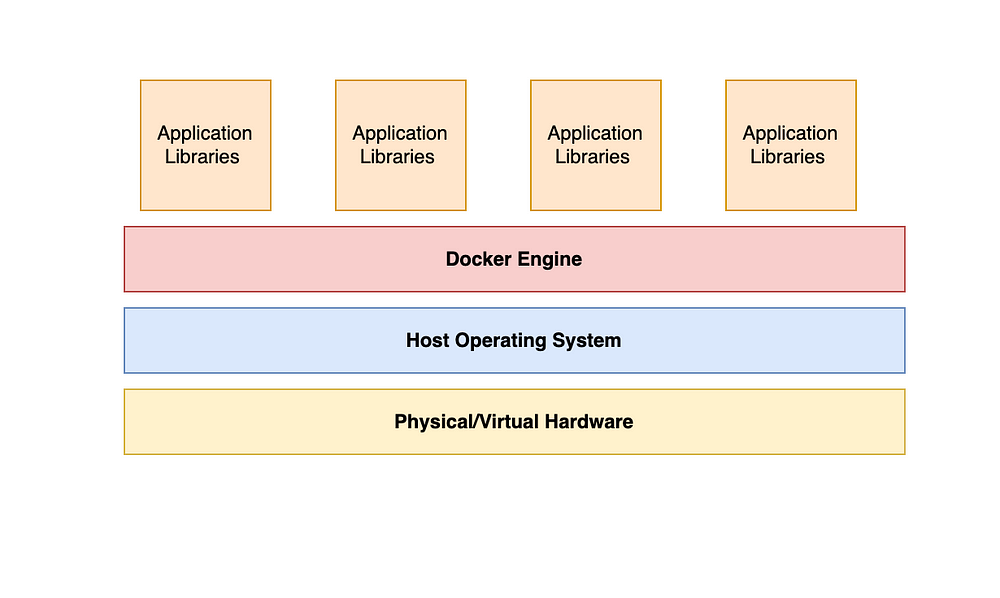

How does Docker work?

The Docker engine runs on top of the host operating system. Docker Engine includes a server process (dockerd) that manages docker containers on the host system. Docker containers are designed to isolate applications and their dependencies, ensuring that they can run consistently across different environments.

To work with Docker, we need to understand three concepts: the Dockerfile, the Docker image, and the Docker container.

What is a Docker file (Dockerfile)?

A Dockerfile is a blueprint for building a Docker image: a text file listing the instructions Docker runs to create the image.

What is a Docker image?

A Docker image is a read-only template for running Docker containers.

Docker images contain all the dependencies needed to execute code inside a container.

What is a Docker container?

A container is a running instance of an image. Under the hood, it is just an isolated process on the host.

One Docker image can be used to spin up many containers, many times, in many places. Images can be easily shared, and anyone who has the image can use it to run the same process.

Getting Started with Docker

Prerequisite

Docker must be installed on your local machine or cloud VM.

Linux:

If you are running Linux on a local laptop, a VirtualBox VM, or a cloud VM, you can use your package manager to install Docker. Follow the instructions in this blog post.

For Mac and Windows:

You can install Docker Desktop which will allow you to run Docker on your local machine.

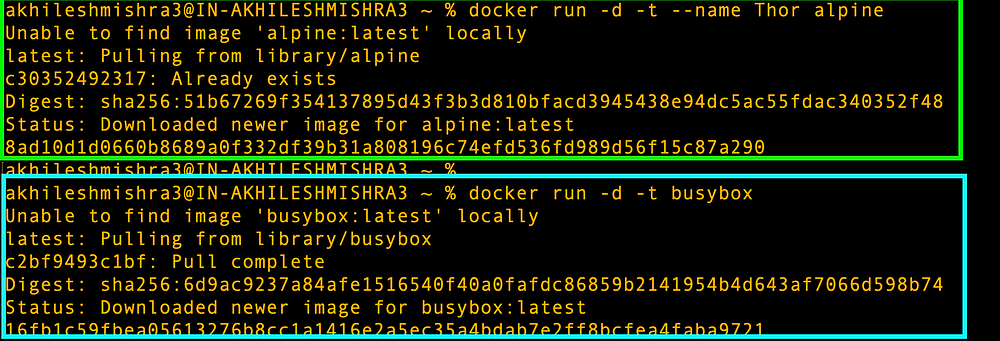

Getting started with Docker is a piece of cake. Just run the commands below:

docker run -d -t --name Thor alpine

docker run -d -t busybox

These will spin up 2 containers from the alpine and busybox images. Both are minimal, public Linux images stored on Docker Hub.

- The -d flag runs the container in detached mode (in the background).

- The -t flag allocates a pseudo-TTY (terminal) for the container. The default command of both images is a shell, which would exit immediately without a terminal; with -t, the shell keeps running, and so does the container.

- The --name flag gives the container a name. If you do not provide one, the container gets a random name.

Note: Since this is the first time we are running docker run with these images, Docker first has to download (pull) them from Docker Hub to the local machine.

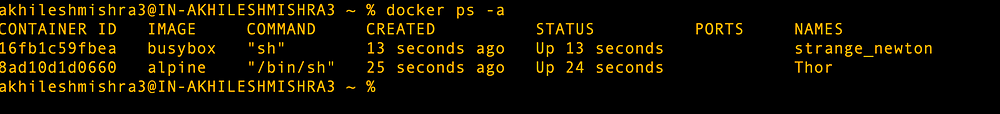

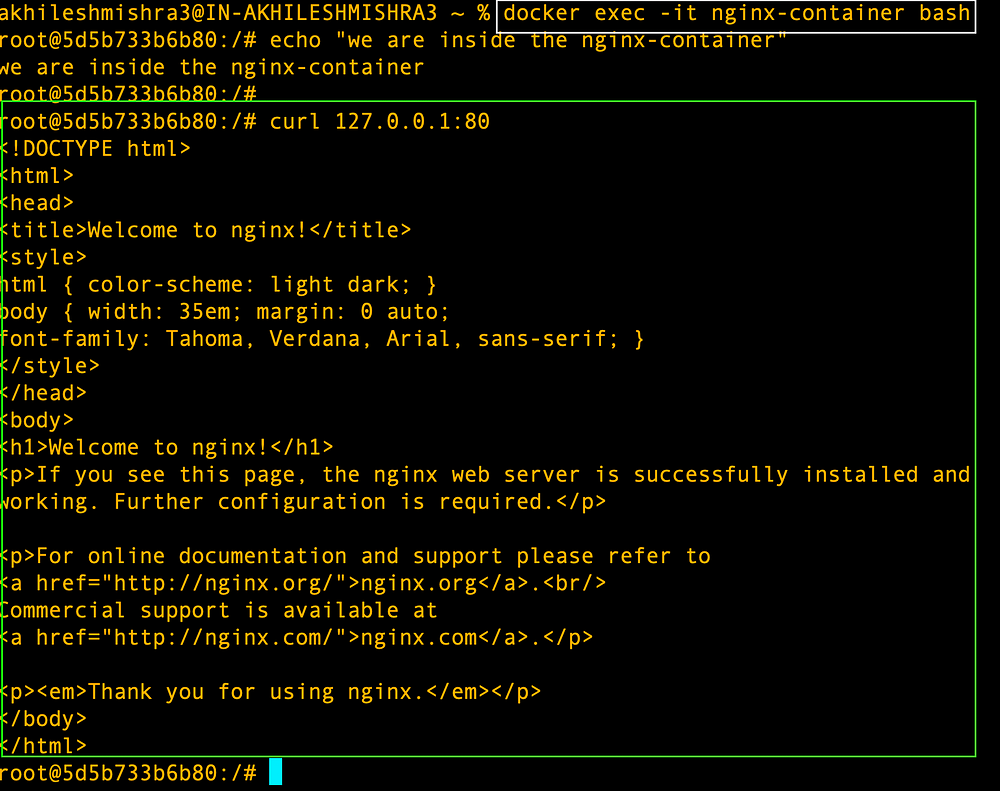

List the running and stopped containers on your local machine:

docker ps # for running containers

docker ps -a # for listing all containers

Listing docker images on the local machine

docker image ls

You can see the size of the images used for running these Linux-based containers. They are tiny compared with full Linux installations such as Ubuntu, Amazon Linux, or CentOS.

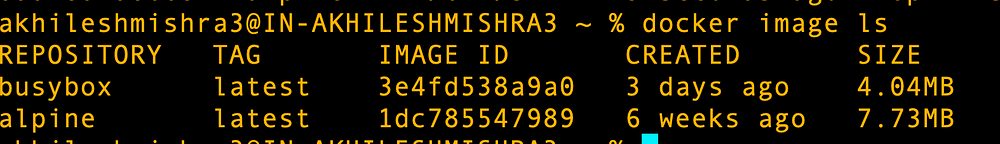

We can interact with running containers either by passing a command or by opening an interactive session with them.

# docker exec -it <container id> <shell>

- docker exec lets you run a command inside a running Docker container.

- -it opens an interactive session with the container (-i keeps STDIN open, -t allocates a TTY). The shell can be sh, bash, zsh, etc., as long as it is installed in the image; alpine and busybox ship sh but not bash.

Let’s run some commands to get the container info:

docker exec -t Thor sh -c "ls; ps"

# -t to allocate a TTY
# Thor is the container name
# sh -c runs both ls and ps inside the container. Without it, the host
# shell would treat ";" as a separator and run ps on the host instead.

docker exec -t 8ad10d1d0660 free -m

# 8ad10d1d0660 is the container ID and free -m is the command to
# check the memory usage
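When you type docker exec Thor ls ; ps in a terminal, your host shell splits the line at the ; before Docker ever sees it. If you script checks like these, Python's subprocess.run with an argument list sidesteps that entirely, because no host shell parses the arguments. This is a minimal sketch; exec_argv and run_in_container are hypothetical helper names, and -t is left out because a TTY isn't needed when capturing output.

```python
import subprocess


def exec_argv(container: str, script: str) -> list[str]:
    """Build a `docker exec` argument list that runs `script` with sh inside `container`."""
    # Passed as a list, no host shell parses these arguments, so ";" in
    # `script` is only interpreted by sh inside the container.
    return ["docker", "exec", container, "sh", "-c", script]


def run_in_container(container: str, script: str) -> str:
    """Run `script` in the container and return its stdout (requires the docker CLI)."""
    # check=True raises CalledProcessError if docker exec exits non-zero.
    result = subprocess.run(
        exec_argv(container, script), capture_output=True, text=True, check=True
    )
    return result.stdout


# Example, assuming the Thor container from above is running:
# print(run_in_container("Thor", "ls; ps"))
```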

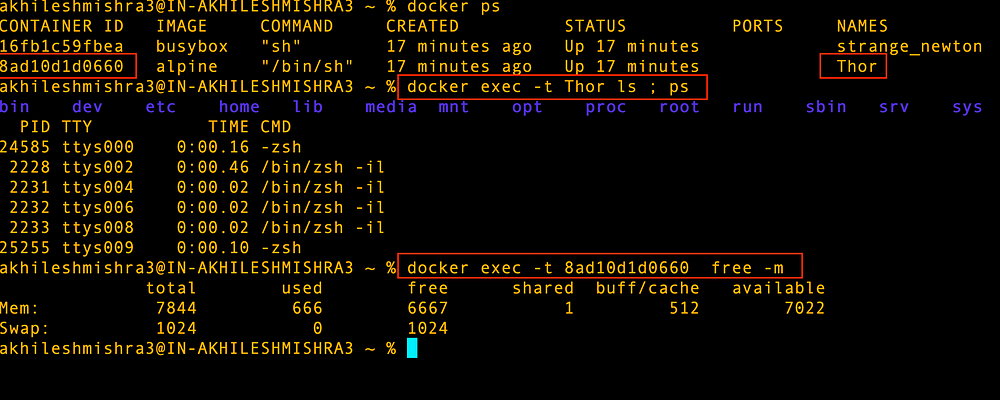

Now we will open an interactive shell session with a container using the -it option

docker exec -it 16fb1c59fbea sh

# you can type "exit" to come out of the session

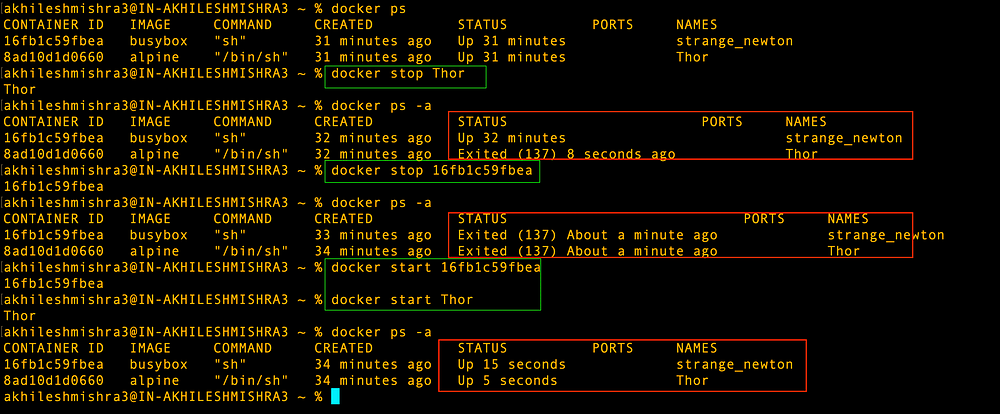

Starting, stopping, and deleting a container

# to stop a running container

docker stop <Container name or ID>

# to start a stopped container

docker start <Container name or ID>

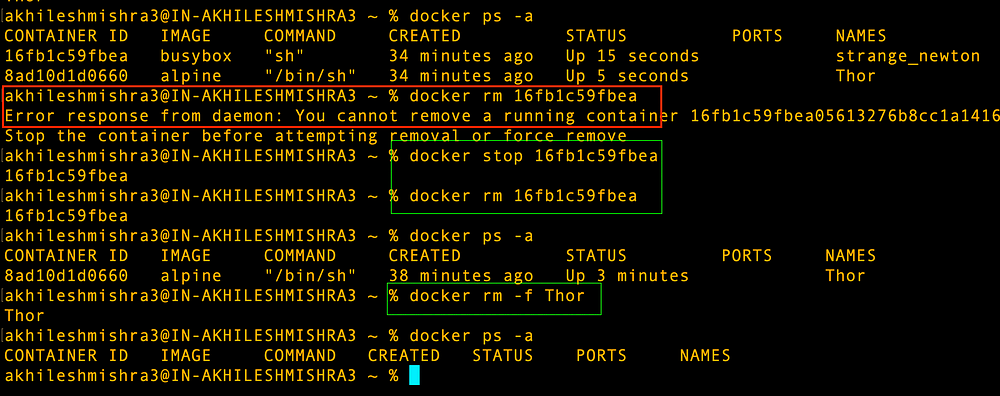

# To delete a running container, either stop it first and then
# remove it with docker rm:
# docker stop <container name or ID>
# docker rm <container name or ID>

docker stop 16fb1c59fbea

docker rm 16fb1c59fbea

# or use the -f flag to force-delete a running container

docker rm -f Thor


Docker Networking

Docker provides several kinds of networks. We will look at three of them.

1. Default bridge

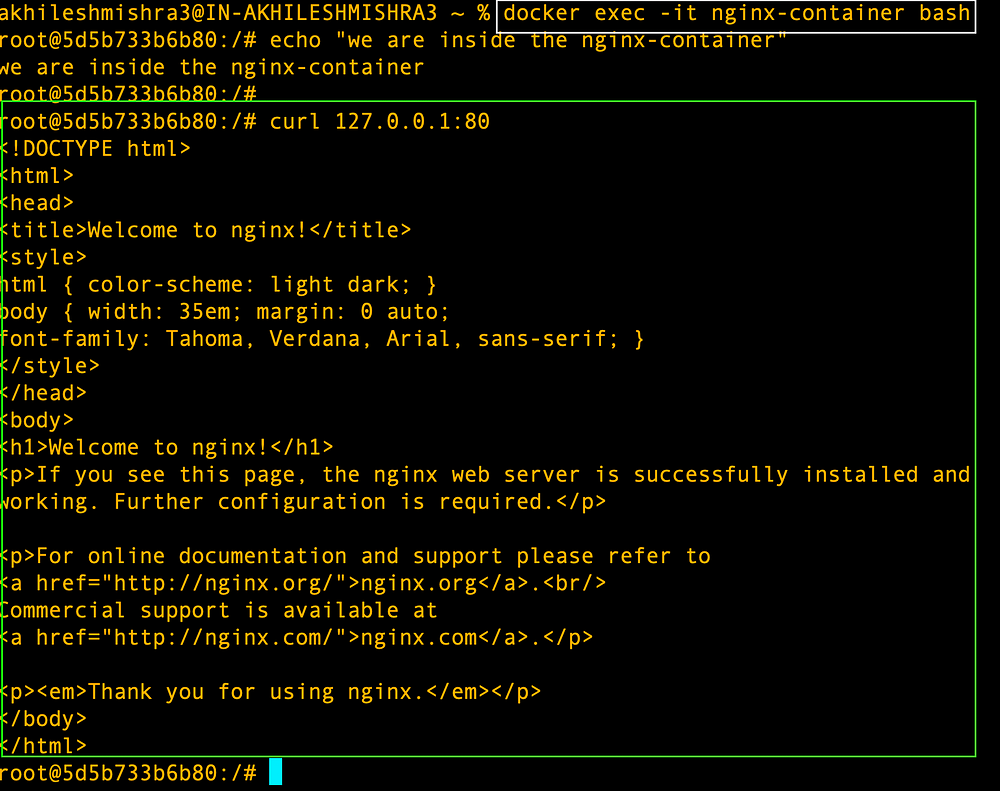

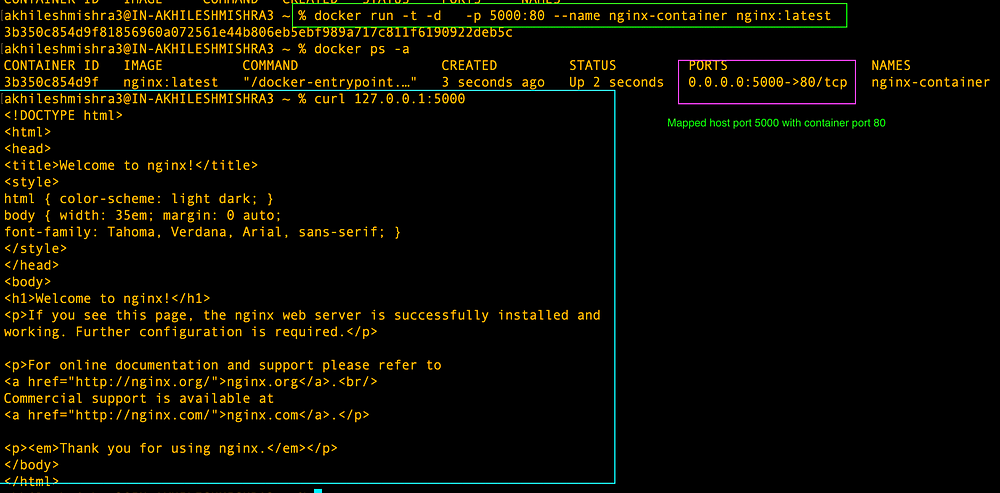

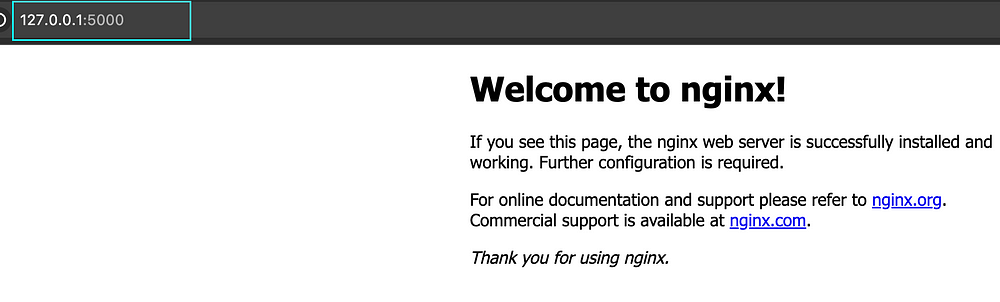

Suppose you are running an nginx container from the nginx image on Docker Hub, and you want to access the web server.

From the screenshot, you can see that the nginx web server is listening on port 80. If we log into the nginx container and run curl 127.0.0.1:80, it returns the HTML response from the web server.

- 127.0.0.1 is a loopback address; it always refers to the current device (localhost). Inside a container, that means the container itself.

As you see above, the web server inside the container did what we expected it to do.

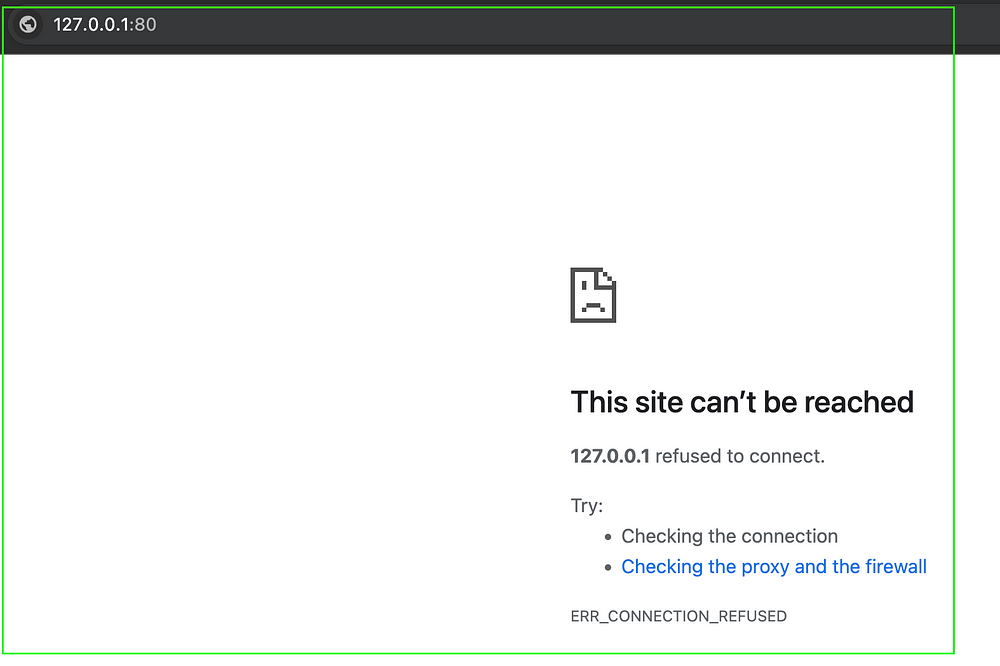

Let’s try to reach the web server from the host machine (your laptop/VM).

We could not connect to the nginx web server running in the container.

Deep dive into networks

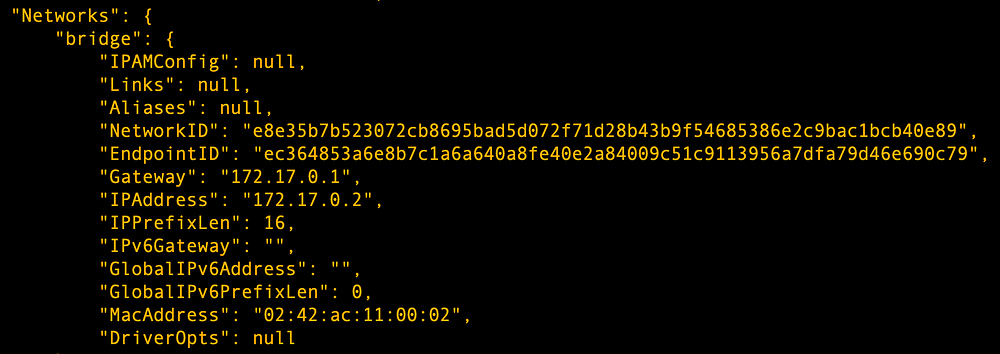

If you inspect the container with the docker inspect command and scroll to the bottom, you will see that it uses the bridge network, along with more details about the network.

docker inspect nginx-container

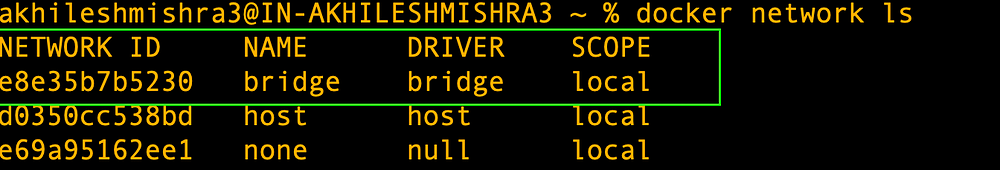

docker network ls

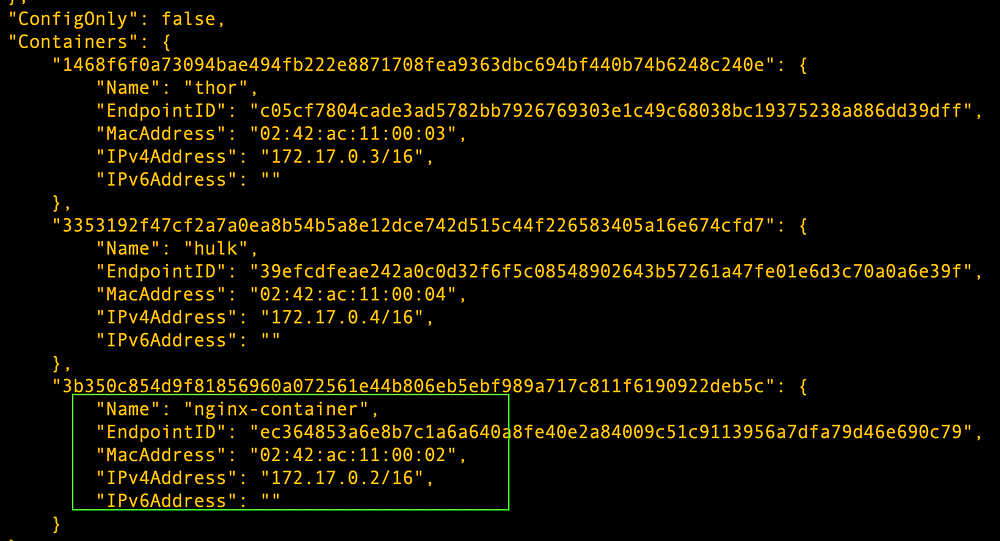

If you inspect the network, you will find the containers using this default bridge network.

docker network inspect bridge
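If you would rather not scroll through the JSON, here is a small Python sketch that reads the output of docker network inspect bridge and maps each attached container's name to its IPv4 address. It relies on the Containers and IPv4Address fields that docker network inspect prints; network_members is a hypothetical helper name, and you would pass it the command's output (for example, captured with subprocess.run).

```python
import json


def network_members(network_json: str) -> dict[str, str]:
    """Map container name -> IPv4 address from `docker network inspect` output."""
    members: dict[str, str] = {}
    # docker network inspect prints a JSON array, one object per network.
    for network in json.loads(network_json):
        # "Containers" maps container IDs to endpoint details; it may be empty or null.
        for endpoint in (network.get("Containers") or {}).values():
            # IPv4Address includes the prefix length, e.g. "172.17.0.2/16".
            members[endpoint["Name"]] = endpoint["IPv4Address"].split("/")[0]
    return members
```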

By default, Docker uses the default bridge network, and the annoying thing about it is that services running in its containers are not reachable through the host's ports. You have to expose them manually by forwarding (publishing) the ports.

Port forwarding

docker run -d -p <host port>:<container port> --name <container name> <image>

# Port forwarding -> forward port 80 of the container to port 5000 on the host machine

docker run -t -d -p 5000:80 --name nginx-container nginx:latest
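To confirm from the host that the published port works, you can open http://localhost:5000 in a browser or use curl. The Python sketch below does the same check with only the standard library; is_reachable is a hypothetical helper name, it treats any 2xx or 3xx response as success, and it deliberately ignores HTTP proxy settings so it tests the local port directly.

```python
import urllib.request


def is_reachable(url: str, timeout: float = 2.0) -> bool:
    """Return True if an HTTP GET to `url` gets a 2xx/3xx response within `timeout` seconds."""
    # An empty ProxyHandler bypasses any http_proxy environment settings.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except OSError:
        # URLError (connection refused, DNS failure), HTTPError (4xx/5xx),
        # and socket timeouts are all subclasses of OSError.
        return False


# Example, assuming nginx-container was started with -p 5000:80:
# print(is_reachable("http://localhost:5000"))
```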

Docker recommends against using the default bridge network in production; instead, it wants us to create our own user-defined networks.

Let’s create 2 containers using busybox image. Once the containers are running, we will try to ping each other from inside the container.

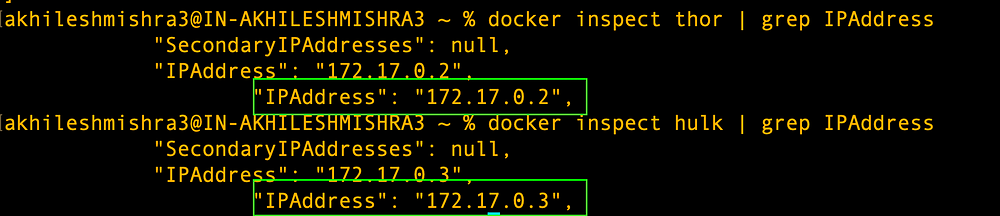

Create 2 containers and use docker inspect them to get each other’s IP addresses.

As you can see the IP addresses of both containers are in the same network(default bridge network)

let’s ping one another with name and IP address

If you look at the above screenshot, you can see that both containers can talk to each other but not by name.

Default bridge network does not privide internal dns name resolution. Also they will use the host network so they will not be isolated

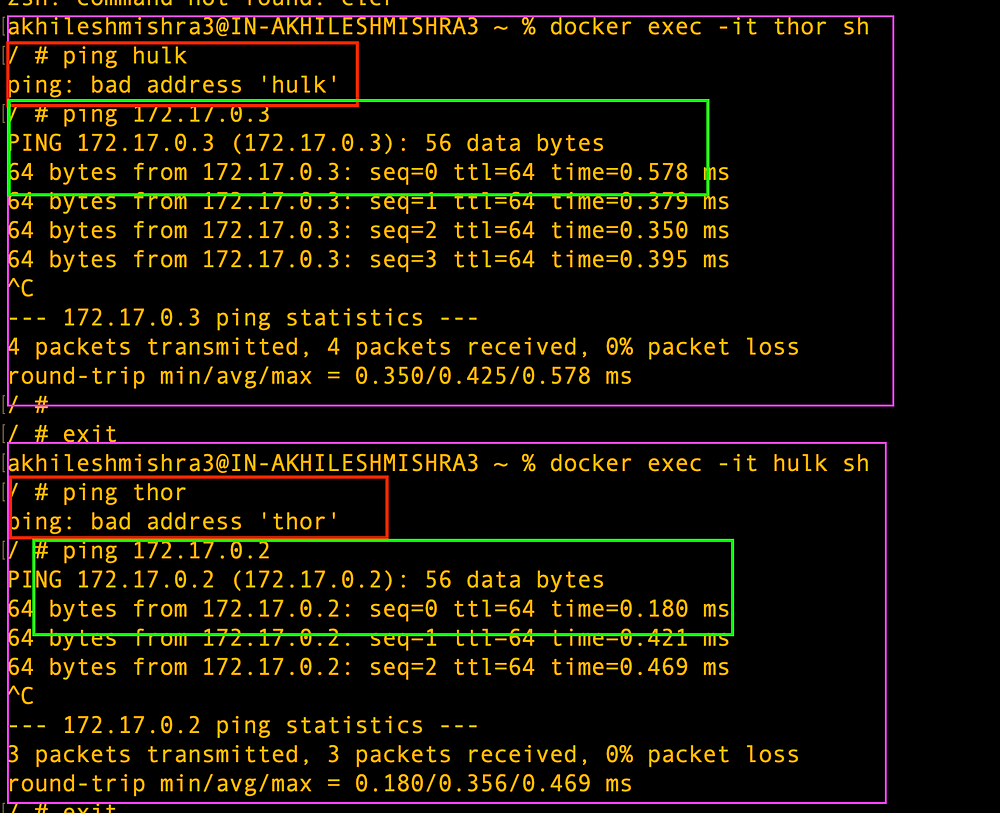

2. User-defined bridge network

Create network

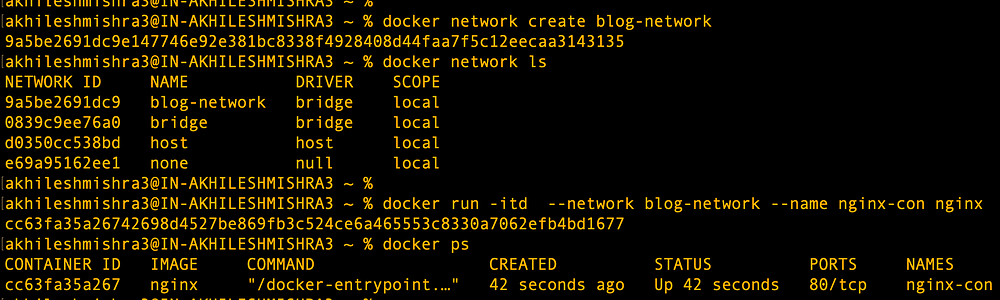

docker network create blog-network

Create an nginx container with the name nginx-con using this new network:

docker run -itd --network blog-network --name nginx-con nginx

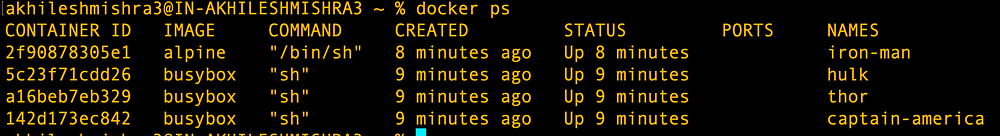

docker ps

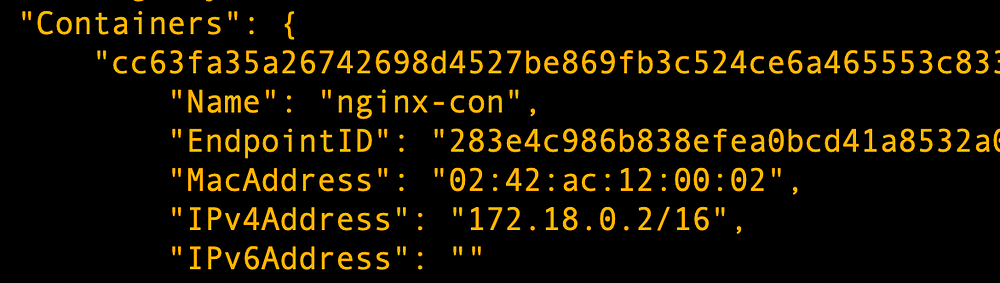

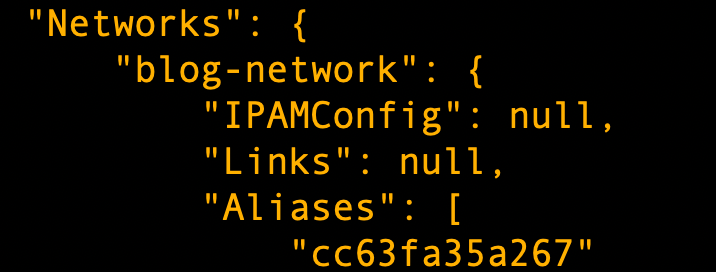

Let’s inspect the docker container and network

docker network inspect blog-network

docker inspect nginx-con

Now if we try to access the nginx web server running in the container nginx-con from our host machine on port 80, it will not work. We still have to do the port forwarding to the host port to access the web server running on the container.

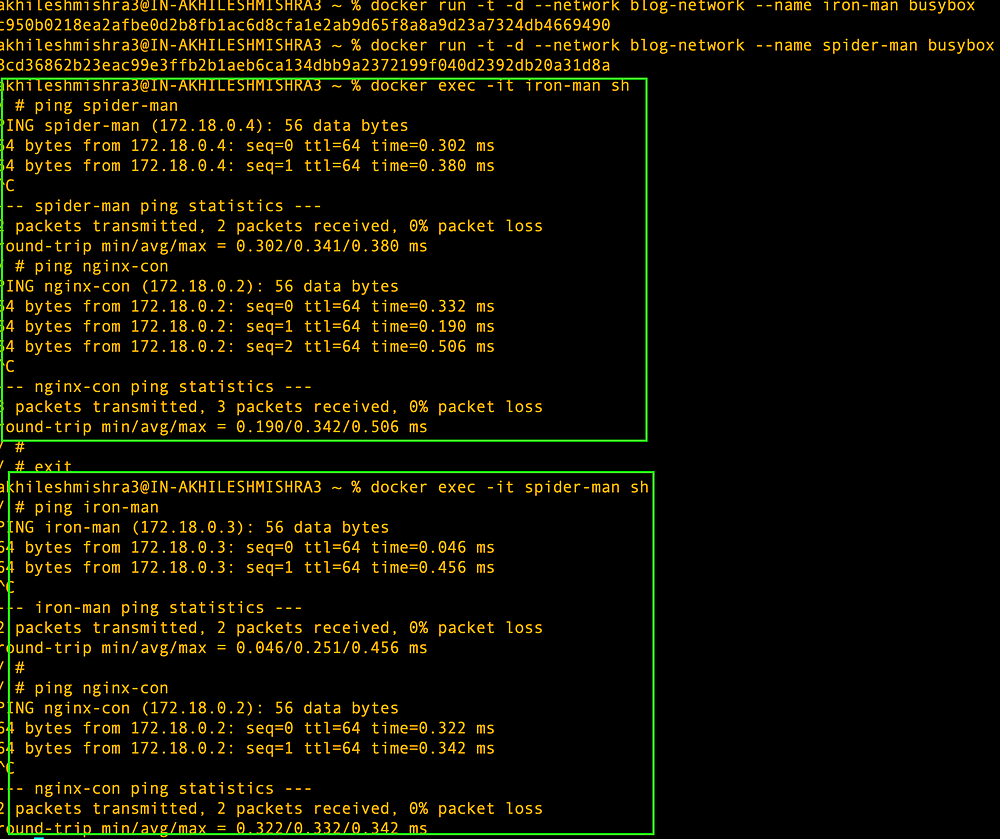

But it does sort out one thing: name resolution. Now if we ping containers by name in a user-defined bridge network, it works.

Let’s create one busybox container and try to ping the nginx-con container.

A user-defined network creates a new, separate network, which isolates the containers running in it from containers on other networks. It also provides name resolution for all containers within the network.
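Name resolution can also be checked from a program instead of with ping, since it goes through the standard resolver. The sketch below uses Python's socket.gethostbyname; resolve is a hypothetical helper name. Run inside a container attached to blog-network, for example with docker run --rm --network blog-network python:3.11 python -c "import socket; print(socket.gethostbyname('nginx-con'))", the lookup of nginx-con should succeed, while the same lookup from a container on the default bridge network fails.

```python
import socket
from typing import Optional


def resolve(name: str) -> Optional[str]:
    """Return the IPv4 address that `name` resolves to, or None if it does not resolve."""
    try:
        # Inside a container, this uses the container's resolver configuration,
        # which on a user-defined network knows the other containers' names.
        return socket.gethostbyname(name)
    except socket.gaierror:
        return None


# Example, assuming this runs in a container on blog-network:
# print(resolve("nginx-con"))
```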

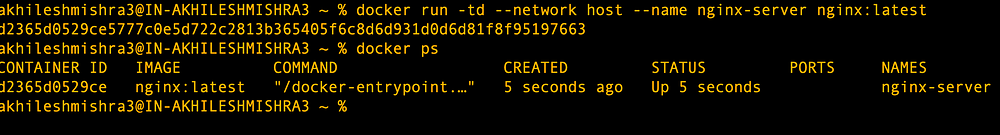

3. Host network

docker run -td --network host --name nginx-server nginx:latest

docker ps

This will run the nginx container in the host network itself.

On a Linux host, if we try to reach the nginx server from localhost, it works without any port forwarding.

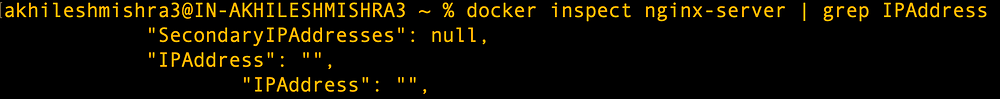

If we try to see the IP address of the container

docker inspect nginx-server | grep IPAddress

What? It does not have its own IP address. The container shares the host machine's network stack, so it uses the host's IP address.

I could keep going with other types of networks (such as none, overlay, and macvlan), but this blog is getting way too long.

Docker Volumes

Docker isolates all content, code, and data in a container from your local filesystem. This means that when you delete a container, all the data written inside it is deleted too.

Sometimes you may want to persist data that a container generated. This is when you can use volumes.

Bind mount

When you use a bind mount, a file or directory on the host machine is mounted into a container.

Docker volumes

A volume is a location in your local filesystem, managed by Docker.

A volume doesn’t increase the size of the containers using it, and the volume’s contents exist outside the lifecycle of any given container.

How to use volumes in docker

There are 2 ways of using volumes with Docker: --mount and -v (or --volume). The -v syntax combines all the options in one field, while the --mount syntax separates them.

-v or --volume: Consists of three fields, separated by colon characters (:).

- The first field is the path to the file or directory on the host machine in the case of bind mounts, or the name of the volume.

- The second field is the path where the file or directory is mounted in the container.

- The third field is optional. It is a comma-separated list of options, such as ro, z, and Z.

--mount: Consists of multiple key-value pairs, separated by commas.

- The type of the mount, which can be bind, volume, or tmpfs.

- The source of the mount (also written as src).

- The destination, which takes as its value the path where the file or directory is mounted in the container (also written as dst or target).
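To make the relationship between the two syntaxes concrete, here is a small Python sketch that rewrites a -v value as a --mount value. It is an illustration under simplifying assumptions, not Docker's own parsing logic: v_to_mount is a hypothetical name, only Linux-style absolute paths (starting with /) are recognized as host paths, and only the ro and rw options are translated. It rejects z and Z, because Docker's documentation notes that --mount does not support these SELinux relabeling options.

```python
def v_to_mount(spec: str) -> str:
    """Translate a Linux-style -v/--volume value into an equivalent --mount value."""
    fields = spec.split(":")
    if len(fields) == 1:
        # "-v /data" creates an anonymous volume mounted at /data.
        return f"type=volume,target={fields[0]}"
    if len(fields) > 3:
        raise ValueError(f"too many ':'-separated fields in {spec!r}")

    source, target = fields[0], fields[1]
    options = fields[2].split(",") if len(fields) == 3 else []

    # First field: a host path means a bind mount; a bare name means a named volume.
    mount_type = "bind" if source.startswith("/") else "volume"
    parts = [f"type={mount_type}", f"source={source}", f"target={target}"]

    for option in options:
        if option == "ro":
            parts.append("readonly")
        elif option != "rw":  # rw is the default, so it needs no key
            raise ValueError(f"option {option!r} is not handled by this sketch")
    return ",".join(parts)
```

For instance, v_to_mount("docker-bind-mount:/app/log") returns "type=volume,source=docker-bind-mount,target=/app/log": because the first field is a bare name rather than a path, Docker treats it as a named volume, not a bind mount.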

Example: Docker container with a bind mount

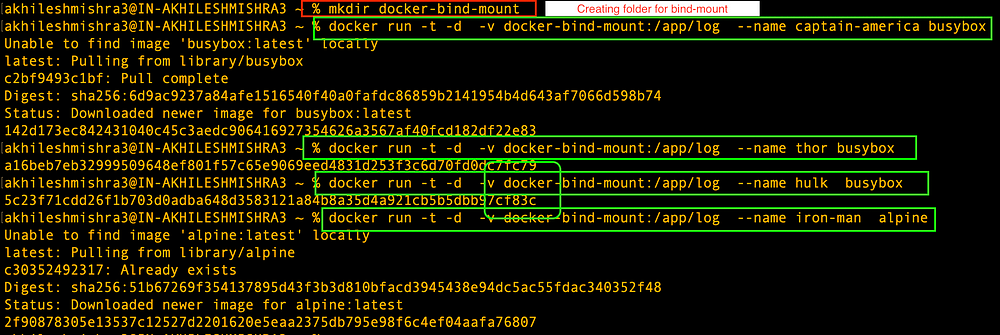

We spun up 4 containers and mounted the same directory/folder from the host machine to all containers.

mkdir docker-bind-mount

docker run -t -d -v docker-bind-mount:/app/log --name captain-america busybox

docker run -t -d -v docker-bind-mount:/app/log --name thor busybox

docker run -t -d -v docker-bind-mount:/app/log --name hulk busybox

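docker run -t -d -v docker-bind-mount:/app/log --name iron-man alpine

# Using the --mount option instead. A bind mount source must be a host path,
# so pass an absolute one. Container names must be unique, so run this in
# place of the first command above.

docker run -t -d --mount type=bind,source="$(pwd)"/docker-bind-mount,target=/app/log \
  --name captain-america busybox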

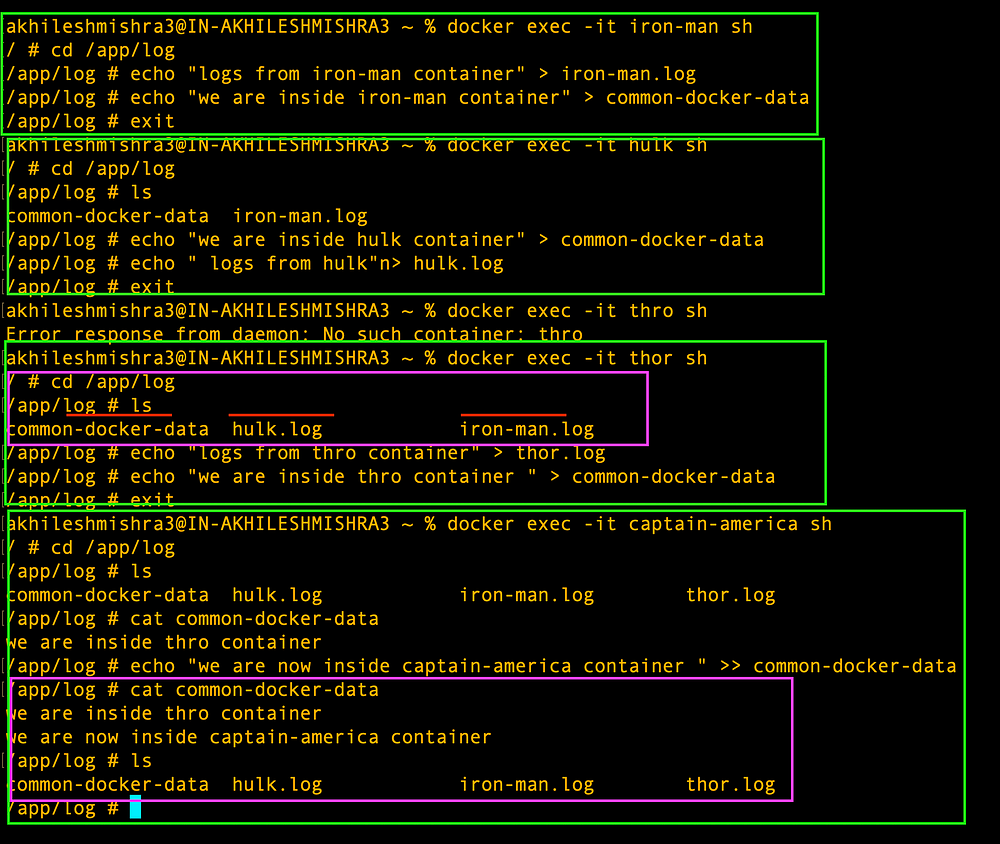

Let’s write some logs inside each container at the path where we mounted the bind mounts: /app/log

If you look closely, you will see that the files we created in the 1st and 2nd containers are visible in the third container, and the files created in the first 3 containers are visible in the 4th (captain-america) container.

Also, the common-docker-data file contains the content from other containers.

This example demonstrated how we can share the files/logs across multiple containers with ease.

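But here is one catch. If we go to the local host and list the directory that we tried to bind mount into all the containers, we will find nothing.

That is because the -v commands above pass a bare name (docker-bind-mount) instead of a path. When the first field is not a path, Docker creates a named volume with that name rather than a bind mount. The containers share the data through that volume, but the local docker-bind-mount directory is never used.

To bind mount the host directory itself, pass an absolute path, for example -v "$(pwd)"/docker-bind-mount:/app/log. Files written to /app/log in the containers will then show up in the local docker-bind-mount folder.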

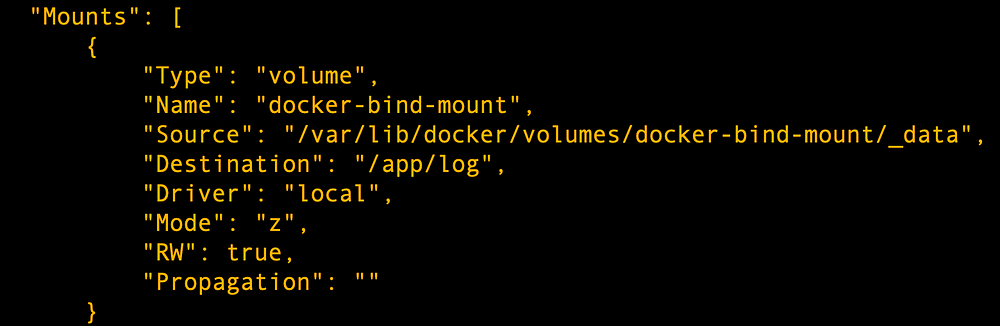

If we inspect one of the docker containers and go to the mount section, we will see the details related to the mounts.

Note: The docker inspect command provides more details about the docker container.

# docker inspect <container-name or container id>

docker inspect hulk
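The Mounts section can also be read programmatically. In the sketch below, docker_inspect shells out to the docker CLI with subprocess.run, and summarize_mounts pulls the Type, Source, Destination, and RW fields from each entry of the Mounts array; both names are hypothetical helpers, not part of Docker's tooling. For a named volume, Source is a directory managed by Docker (on Linux, typically under /var/lib/docker/volumes) rather than a folder you created.

```python
import json
import subprocess


def docker_inspect(name: str) -> str:
    """Return the raw JSON printed by `docker inspect <name>` (requires the docker CLI)."""
    result = subprocess.run(
        ["docker", "inspect", name], capture_output=True, text=True, check=True
    )
    return result.stdout


def summarize_mounts(inspect_json: str) -> list[tuple[str, str, str, bool]]:
    """Extract (Type, Source, Destination, RW) for every mount of every inspected container."""
    summary = []
    # docker inspect prints a JSON array, one object per container.
    for container in json.loads(inspect_json):
        for mount in container.get("Mounts", []):
            summary.append(
                (mount["Type"], mount.get("Source", ""), mount["Destination"], mount["RW"])
            )
    return summary


# Example, assuming the hulk container exists:
# for row in summarize_mounts(docker_inspect("hulk")):
#     print(row)
```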

Example: Docker container with volumes

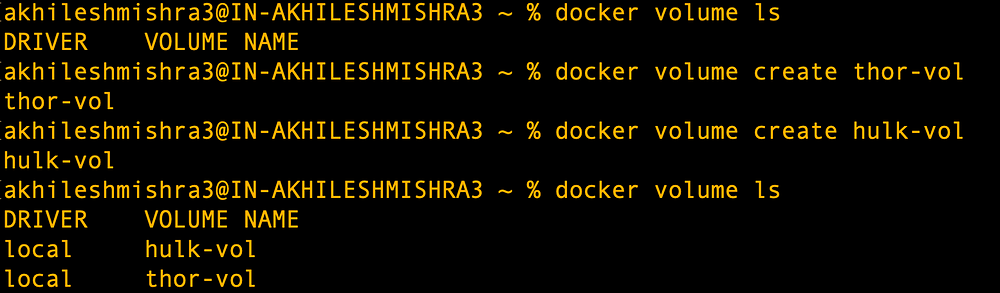

Create the volume

# docker volume create <volume name>

docker volume create thor-vol

docker volume create hulk-vol

# to list docker volumes

docker volume ls

# docker volume
# Commands:
#   create   Create a volume
#   inspect  Display detailed information on one or more volumes
#   ls       List volumes
#   prune    Remove unused local volumes
#   rm       Remove one or more volumes

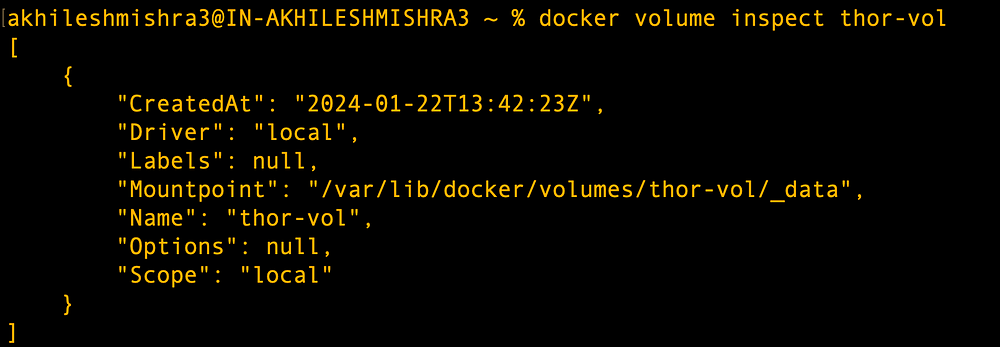

Inspect the volume

docker volume inspect thor-vol

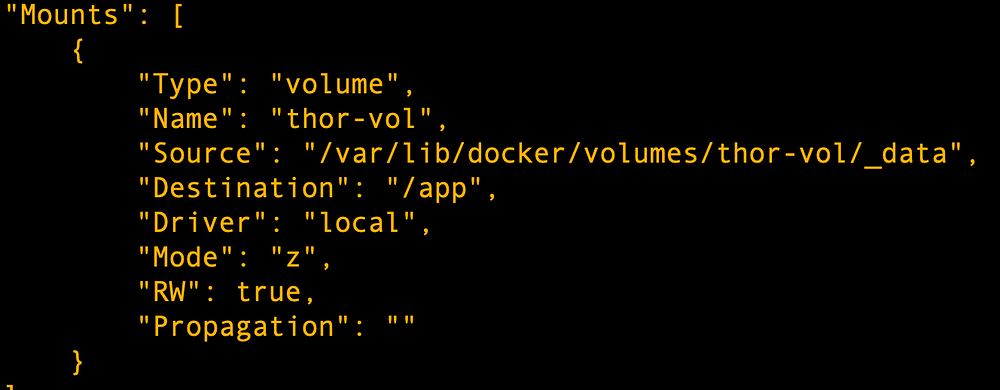

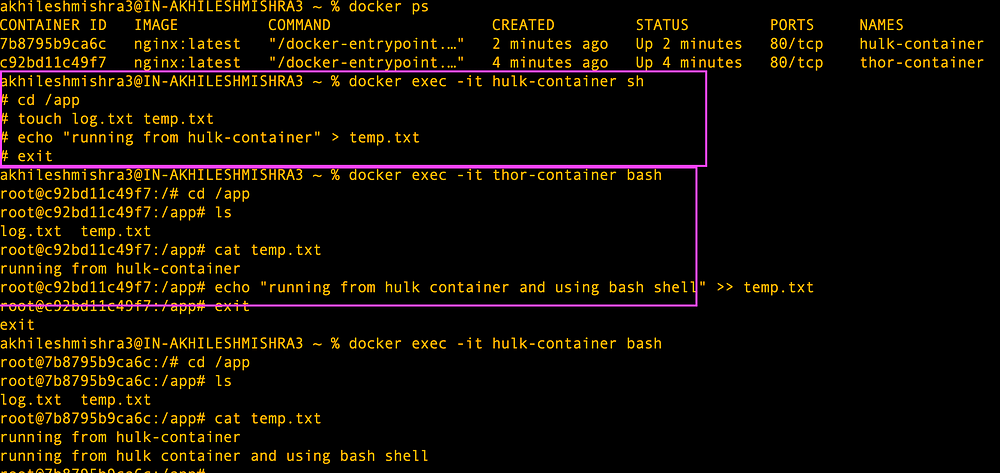

Let’s mount this volume while creating a new container. This time we will use the --mount option:

docker run -d \
  --name thor-container \
  --mount type=volume,source=thor-vol,target=/app \
  nginx:latest

# type defaults to volume when you specify a volume as the source

docker run -d \
  --name hulk-container \
  --mount source=thor-vol,target=/app \
  nginx:latest

docker inspect hulk-container

Let’s create some logs in both containers and see if the files/logs are visible to both containers.

As you can see from the above screenshot, we can share data/logs across containers.

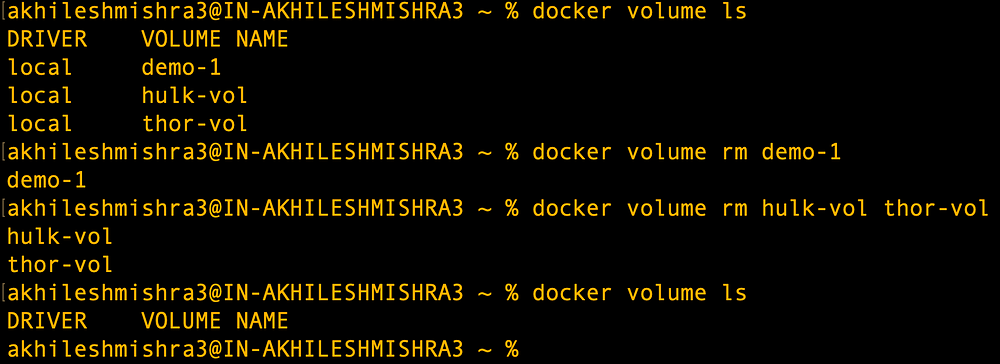

Deleting docker volumes

# To remove a docker volume

# we can delete one or more volumes in a single command

docker volume rm <volume-names>

Building our docker image

Now that we have gone through basic docker concepts, let’s do some hands-on examples.

What are we going to do here?

We will build a Docker image with a basic Flask application inside it, then push the built image to Docker Hub.

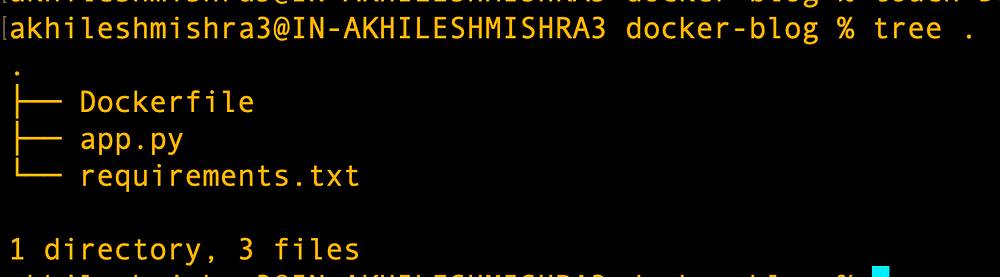

Let's create a folder with 3 files: app.py, requirements.txt, and Dockerfile.

touch Dockerfile app.py requirements.txt

Paste the code below into each of the files.

# app.py

from flask import Flask

app = Flask(__name__)


@app.route('/')
def hello_docker():
    return 'Hello, Docker!'


if __name__ == '__main__':
    # host='0.0.0.0' listens on all interfaces, so the app is reachable from outside the container
    app.run(debug=True, host='0.0.0.0')

# requirements.txt

Flask

Now we will create a Dockerfile to build the Docker image.

#Dockerfile

# Use an official Python runtime as a parent image

FROM python:3.11

# Copy the python dependency file into the container at /app

COPY requirements.txt /app

# Install any needed packages specified in requirements.txt

RUN pip install --no-cache-dir -r requirements.txt

# Copy the flask app file into the container at /app

COPY app.py /app

# Make port 5000 available to the world outside this container

EXPOSE 5000

# Run app.py when the container launches

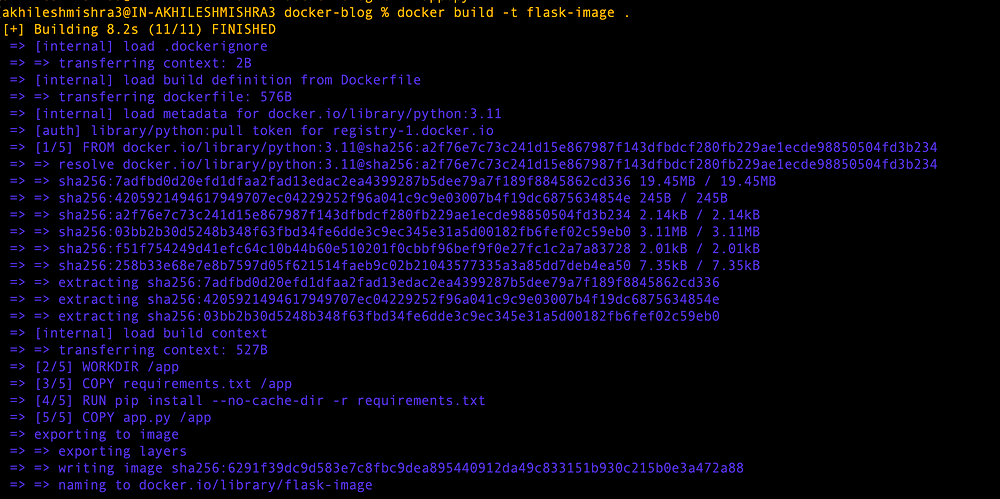

CMD ["python", "app.py"]Building docker image

# docker build -t <image-name> <build context directory containing the Dockerfile>

docker build -t flask-image .

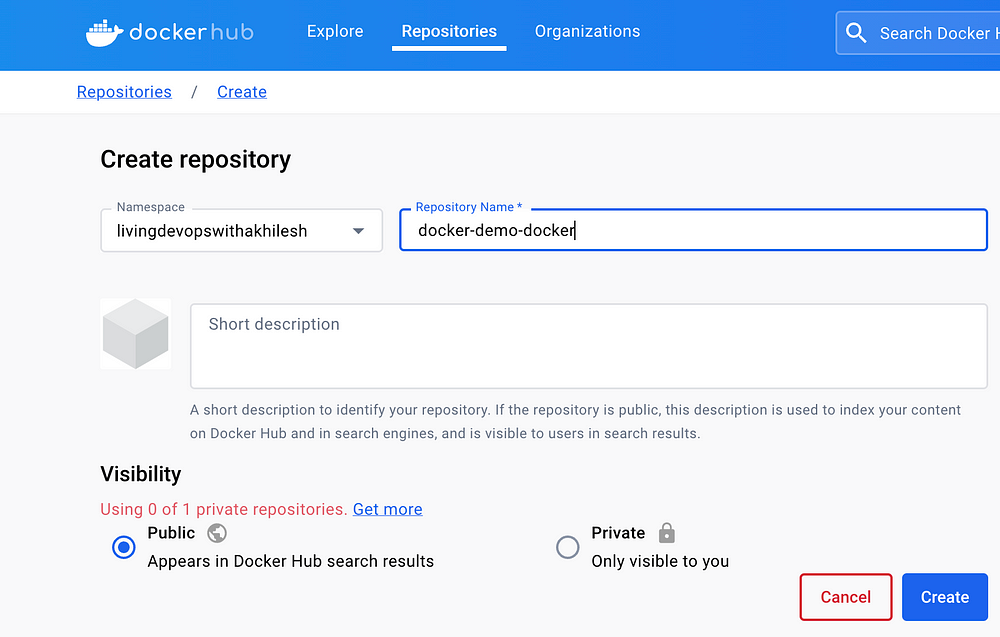

Let’s create a Docker Hub repository. Create an account if you haven’t already; I created one with the name livingdevopswithakhilesh.

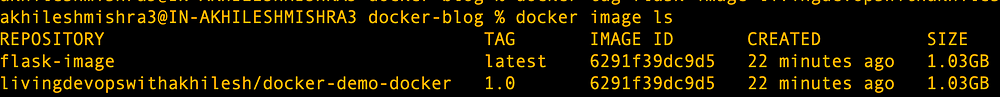

Tag the image

# docker tag <local image> <docker hub username>/<repository name>:<tag>

docker tag flask-image livingdevopswithakhilesh/docker-demo-docker:1.0

Push the image to Docker Hub. Before you can push the image, log in with docker login:

docker login

# docker push <tagged-image>

docker push livingdevopswithakhilesh/docker-demo-docker:1.0

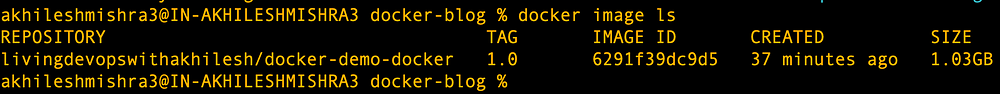

Next, we delete the local images, pull the image from Docker Hub, and use it to run a container.

docker pull livingdevopswithakhilesh/docker-demo-docker:1.0

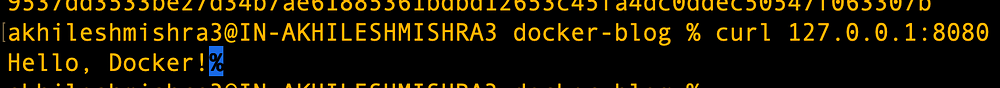

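docker run -td -p 8080:5000 --name flask livingdevopswithakhilesh/docker-demo-docker:1.0

# forwards container port 5000 (the Flask app) to host port 8080;
# open http://localhost:8080 to see "Hello, Docker!"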

That is all for this blog, see you on the next one. Follow me for more useful content like this.

About me

I am Akhilesh Mishra, a self-taught DevOps engineer with 11+ years of experience working on private and public cloud (GCP & AWS) technologies.

I also mentor DevOps aspirants in their journey by providing guided learning and mentorship.

Mentorship with Akhilesh on Topmate

Longterm mentorship with Akhilesh on Preplaced

Connect with me on Linkedin: https://www.linkedin.com/in/akhilesh-mishra-0ab886124/