Accessing Amazon S3 privately enhances security, improves performance, reduces costs, ensures compliance with regulatory requirements, simplifies network architecture, and provides reliable and managed connectivity. This approach is particularly beneficial for workloads that require secure, high-performance, and cost-effective access to S3.

Accessing S3 from a Private EC2 Instance Without Going through the Internet with VPC Gateway Endpoint – Terraform Implementation

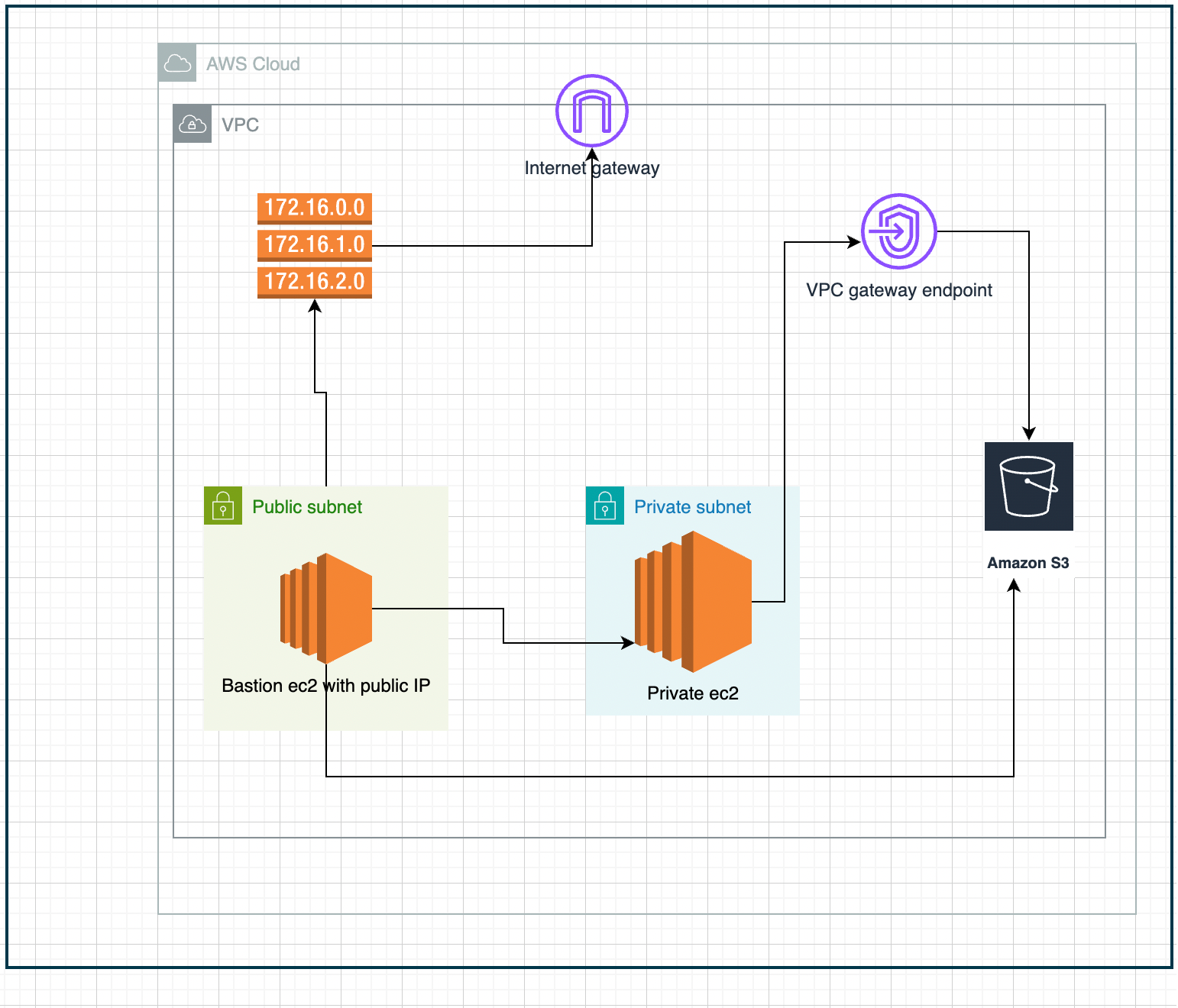

To connect to an S3 bucket privately from an instance in a private subnet, you can use a VPC gateway endpoint for S3. This allows the traffic between your VPC and S3 to remain within the AWS network, avoiding exposure to the public internet.

As an added benefit, AWS does not charge for the VPC gateway endpoint, making it a cost-effective solution for secure S3 access.

What will we be doing in this blog?

In this blog post, we’ll explore how to configure a VPC endpoint to enable an EC2 instance in a private subnet to securely access S3, ensuring that your sensitive data remains protected while still allowing essential functionality.

If you look at the architectural diagram above, you will see that we access the S3 bucket (AWS's object storage service) from both a public and a private EC2 instance. Both of these scenarios are common in real-world applications.

AWS Infra Setup

- One EC2 instance with a public IP and a route to the internet (via an internet gateway).

- One EC2 instance without a public IP, which cannot access the internet.

- One S3 bucket.

- One VPC gateway endpoint that allows private access to the S3 bucket from the VPC.

In this blog post, I will use Terraform to provision an EC2 bastion host, a private EC2 instance, an S3 bucket, and an IAM role to access the bucket. I will also show you how to use a VPC gateway endpoint to access S3 without going through the internet.

Implementation

Prerequisites:

- Create an SSH key pair that we will use to access the EC2 instances (manual step).

- Create an IAM user for the CLI and generate an access key ID and secret access key (manual step).

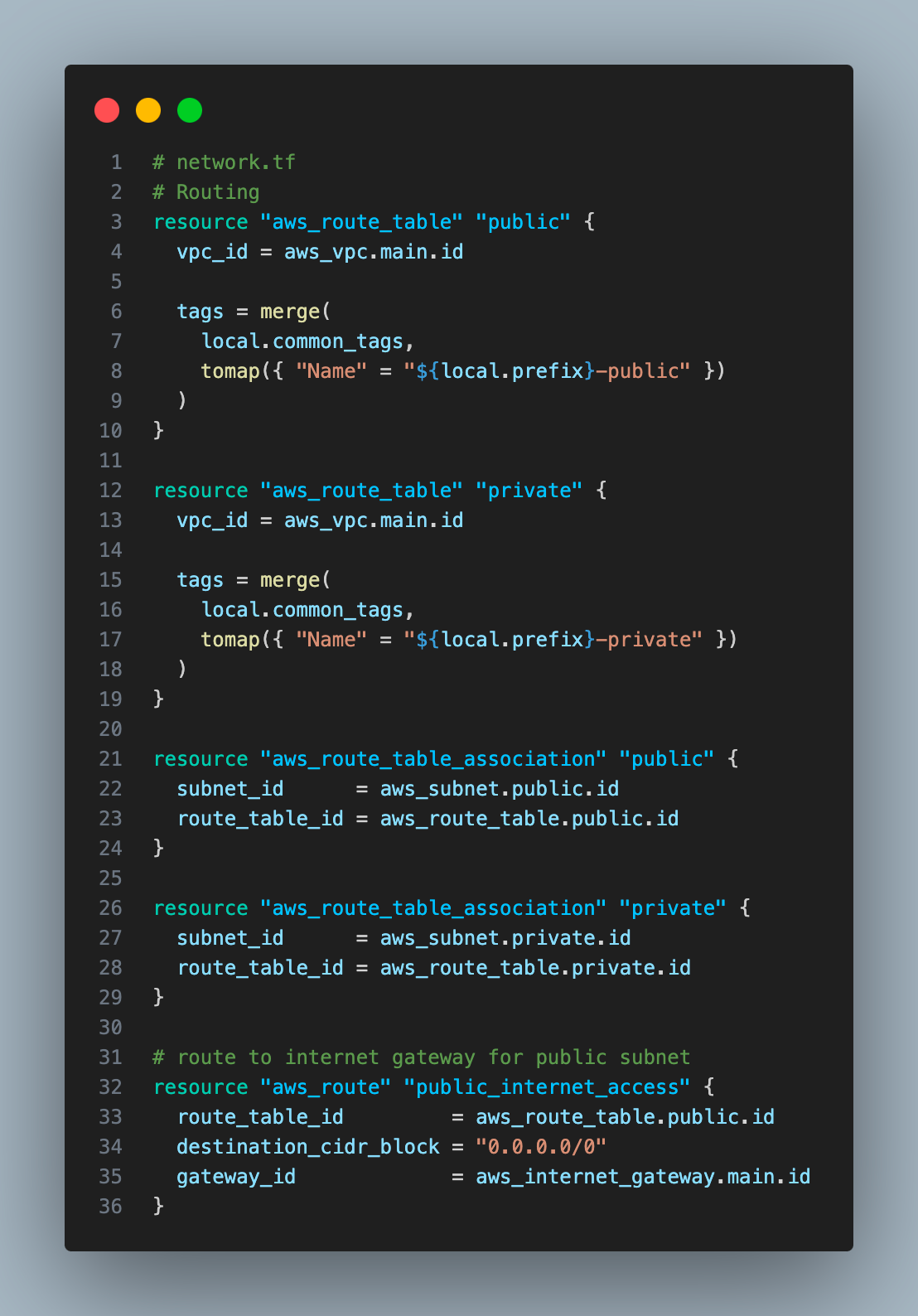

Create the VPC, the private and public subnets, and the route tables associated with both subnets.

This part is the same as in my last blog post, where I explained how all the networking components fit together.

I will keep all the code used in this blog post in my GitHub repo; use the link to access the code.

map_public_ip_on_launch = true makes sure every EC2 instance launched in the public subnet gets a public IP.

The public subnet's route table has a default route (0.0.0.0/0) to the internet gateway, allowing resources in the public subnet to talk to the internet.
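For reference, here is a minimal sketch of that networking layer, assuming the AWS provider and hypothetical resource names and CIDR ranges (aws_vpc.main, 10.0.0.0/16, and so on); the repo's actual values may differ. The private route table deliberately has no default route, which is why the private instance cannot reach the internet.

```hcl
# Hypothetical names and CIDR ranges; adjust them to match your own setup.
resource "aws_vpc" "main" {
  cidr_block = "10.0.0.0/16"
}

resource "aws_internet_gateway" "igw" {
  vpc_id = aws_vpc.main.id
}

# Public subnet: instances launched here get a public IP automatically.
resource "aws_subnet" "public" {
  vpc_id                  = aws_vpc.main.id
  cidr_block              = "10.0.1.0/24"
  map_public_ip_on_launch = true
}

# Private subnet: no public IPs are assigned on launch.
resource "aws_subnet" "private" {
  vpc_id     = aws_vpc.main.id
  cidr_block = "10.0.2.0/24"
}

# Public route table: default route to the internet gateway.
resource "aws_route_table" "public" {
  vpc_id = aws_vpc.main.id

  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.igw.id
  }
}

# Private route table: only the implicit local (intra-VPC) route.
resource "aws_route_table" "private" {
  vpc_id = aws_vpc.main.id
}

resource "aws_route_table_association" "public" {
  subnet_id      = aws_subnet.public.id
  route_table_id = aws_route_table.public.id
}

resource "aws_route_table_association" "private" {
  subnet_id      = aws_subnet.private.id
  route_table_id = aws_route_table.private.id
}
```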

Create a bastion EC2 instance on a public subnet and a storage bucket

Before we create the EC2 instance, we will set up a few things:

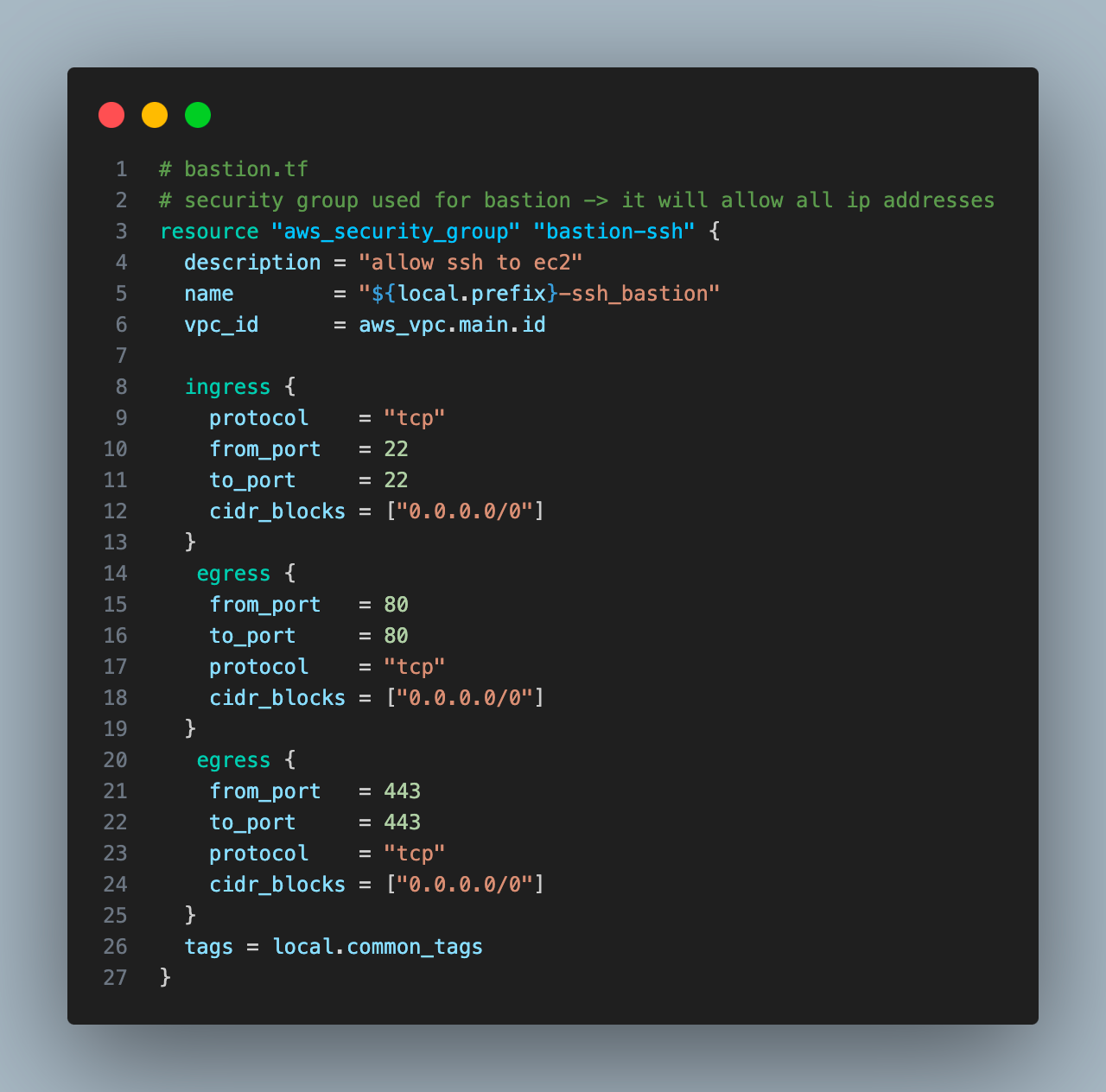

1. Security group with ingress SSH and egress HTTP and HTTPS

The SSH port enables SSH connections to the bastion instance. The HTTP and HTTPS ports let the EC2 instances talk to S3 through its HTTP/HTTPS endpoints.

A security group acts as a virtual firewall, controlling inbound and outbound traffic for instances. It is stateful: if you allow inbound traffic from a specific IP address, the corresponding outbound response traffic is automatically permitted, and vice versa.
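A sketch of such a security group, assuming the VPC resource is named aws_vpc.main (hypothetical). Terraform removes the allow-all egress rule that AWS adds by default, so only the egress rules listed here apply. The extra SSH egress rule scoped to the VPC CIDR is an assumption not listed in the rules above: without it, the bastion could not open an SSH connection to the private instance later on.

```hcl
resource "aws_security_group" "ec2_sg" {
  name        = "ec2-ssh-http-https" # hypothetical name
  description = "SSH in; HTTP/HTTPS out"
  vpc_id      = aws_vpc.main.id

  # Inbound SSH. 0.0.0.0/0 keeps the demo simple; restrict it to your IP in practice.
  ingress {
    description = "SSH"
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  egress {
    description = "HTTP"
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # The AWS CLI talks to S3 over HTTPS.
  egress {
    description = "HTTPS"
    from_port   = 443
    to_port     = 443
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # Assumption: lets the bastion open SSH connections to instances in the VPC.
  egress {
    description = "SSH within the VPC"
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = [aws_vpc.main.cidr_block]
  }
}
```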

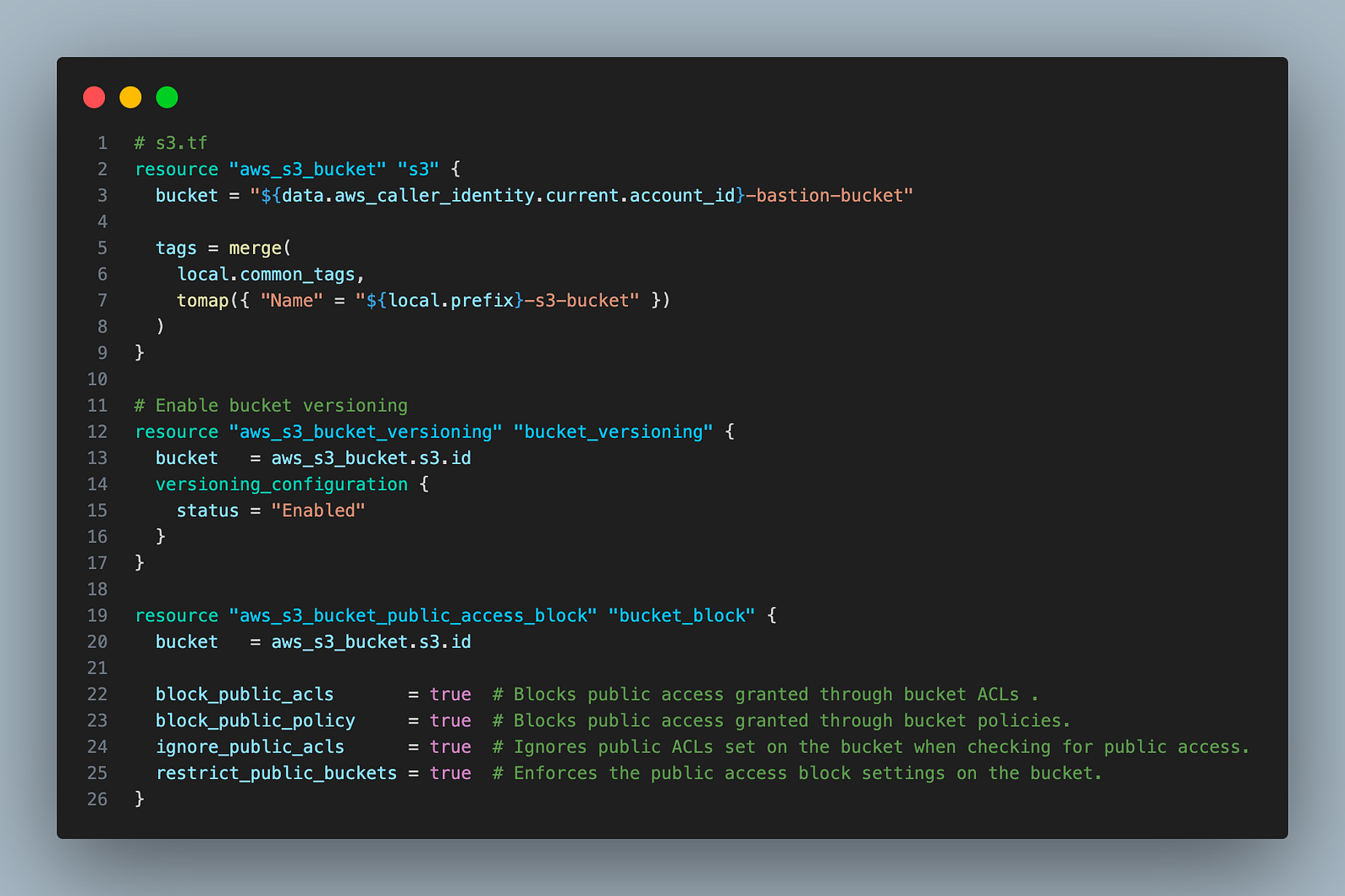

2. Create an S3 bucket that we will access from the EC2 instance

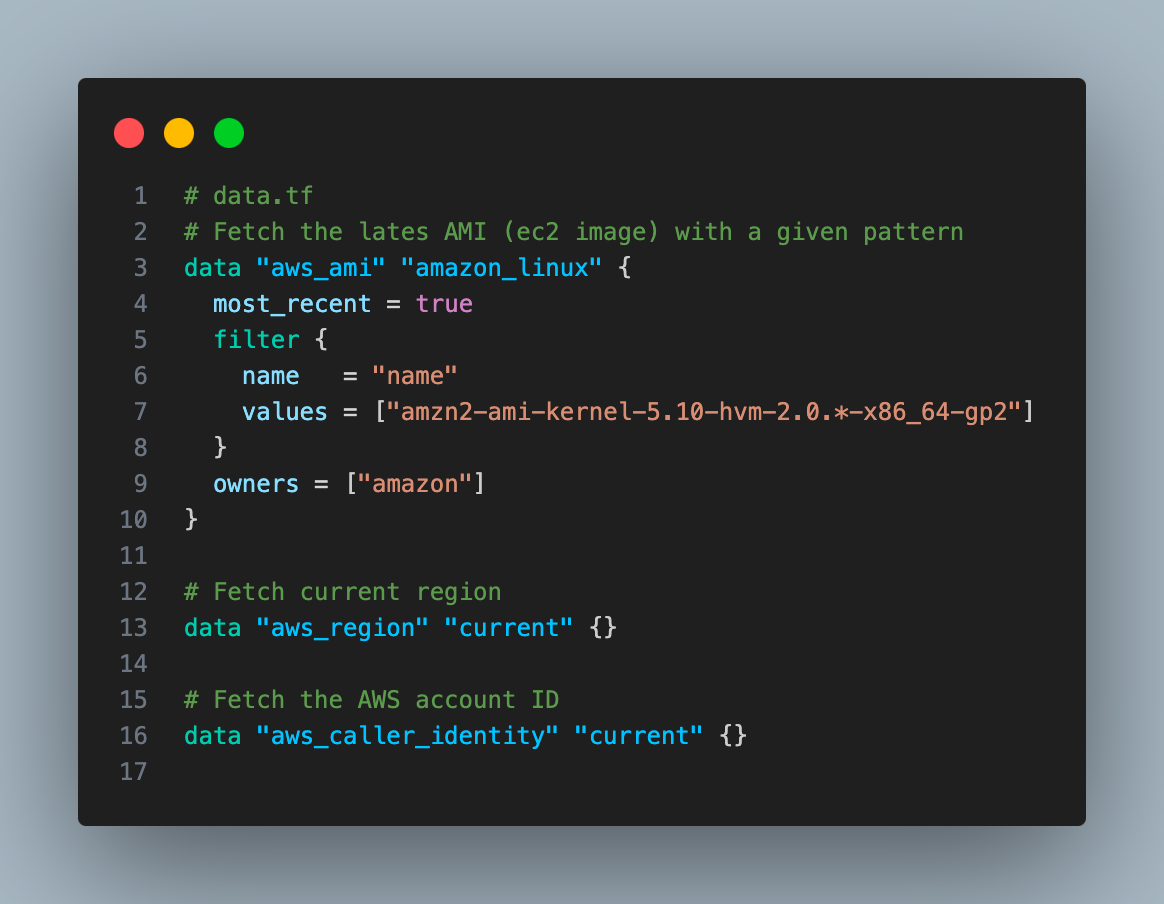

data.aws_caller_identity.current.account_id gives the ID of the AWS account where you are deploying the Terraform code. We use it to give the bucket a unique name, because S3 bucket names must be globally unique across all AWS accounts.

We have also enabled bucket versioning and blocked public access for enhanced security.
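A minimal sketch of the bucket with versioning and a public access block, assuming AWS provider v4 or later (where versioning is configured through a separate resource) and the data.aws_caller_identity.current data source declared in data.tf. The private-access-demo prefix and the resource name storage are hypothetical.

```hcl
# Account ID suffix keeps the globally unique bucket name from colliding.
resource "aws_s3_bucket" "storage" {
  bucket = "private-access-demo-${data.aws_caller_identity.current.account_id}"
}

# Keep previous versions of objects when they are overwritten or deleted.
resource "aws_s3_bucket_versioning" "storage" {
  bucket = aws_s3_bucket.storage.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Block every form of public access to the bucket.
resource "aws_s3_bucket_public_access_block" "storage" {
  bucket = aws_s3_bucket.storage.id

  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```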

When an EC2 instance has a public IP, it can reach S3 buckets over the internet (given there is a route from its subnet to the internet gateway).

If the EC2 instance does not have a public IP, you can use a VPC gateway endpoint to reach the S3 bucket over private VPC traffic without going through the internet. A VPC interface endpoint or a NAT gateway would also work, but both incur a cost.

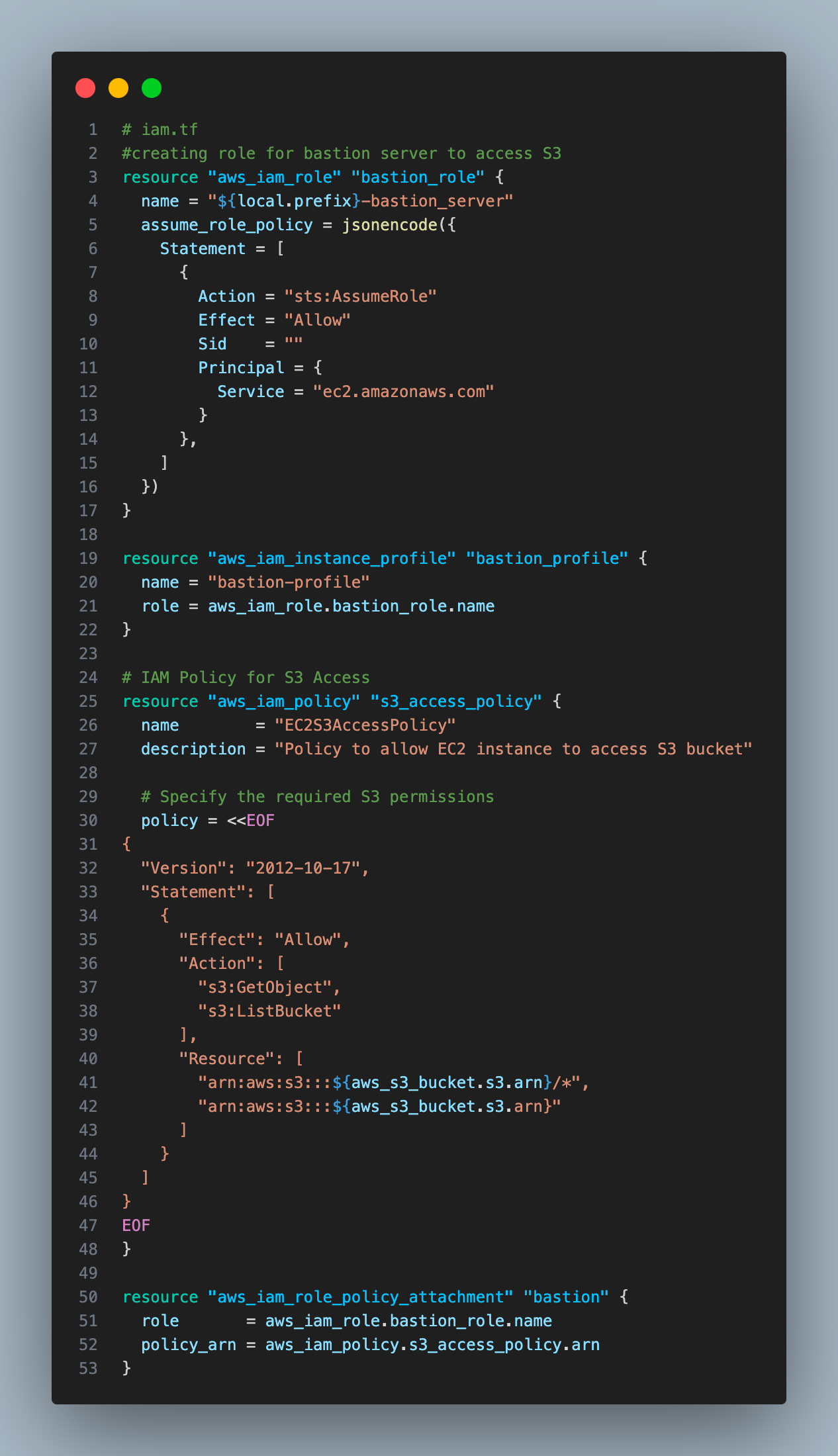

3. Create an IAM role with permission to the S3 bucket.

It enables the EC2 instances to access the S3 bucket. It takes four steps:

- Create an IAM role

- Create an IAM policy

- Attach the IAM policy to the IAM role

- Create an IAM instance profile so that the EC2 instance can assume the IAM role associated with the instance profile

We have constructed the bucket ARNs using Terraform string interpolation, which lets you build more flexible and dynamic configurations. String interpolation is written as ${...}.

In AWS, an ARN (Amazon Resource Name) is a unique identifier assigned to AWS resources.
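The four IAM steps might look like this, assuming a bucket resource named aws_s3_bucket.storage and the role, policy, and profile names shown (all hypothetical). The permissions are scoped to what the aws s3 commands in this post need: listing the bucket and reading and writing objects.

```hcl
# Step 1: role whose trust policy lets the EC2 service assume it.
resource "aws_iam_role" "ec2_s3_role" {
  name = "ec2-s3-access-role"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Principal = { Service = "ec2.amazonaws.com" }
      Action    = "sts:AssumeRole"
    }]
  })
}

# Step 2: permissions policy; the ARNs are built with ${} interpolation.
resource "aws_iam_policy" "s3_access" {
  name = "ec2-s3-access-policy"

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        # ListBucket applies to the bucket ARN itself
        Effect   = "Allow"
        Action   = ["s3:ListBucket"]
        Resource = ["arn:aws:s3:::${aws_s3_bucket.storage.bucket}"]
      },
      {
        # Object-level actions apply to the keys inside the bucket
        Effect   = "Allow"
        Action   = ["s3:GetObject", "s3:PutObject"]
        Resource = ["arn:aws:s3:::${aws_s3_bucket.storage.bucket}/*"]
      }
    ]
  })
}

# Step 3: attach the policy to the role.
resource "aws_iam_role_policy_attachment" "s3_access" {
  role       = aws_iam_role.ec2_s3_role.name
  policy_arn = aws_iam_policy.s3_access.arn
}

# Step 4: instance profile that passes the role to EC2 instances.
resource "aws_iam_instance_profile" "ec2_profile" {
  name = "ec2-s3-instance-profile"
  role = aws_iam_role.ec2_s3_role.name
}
```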

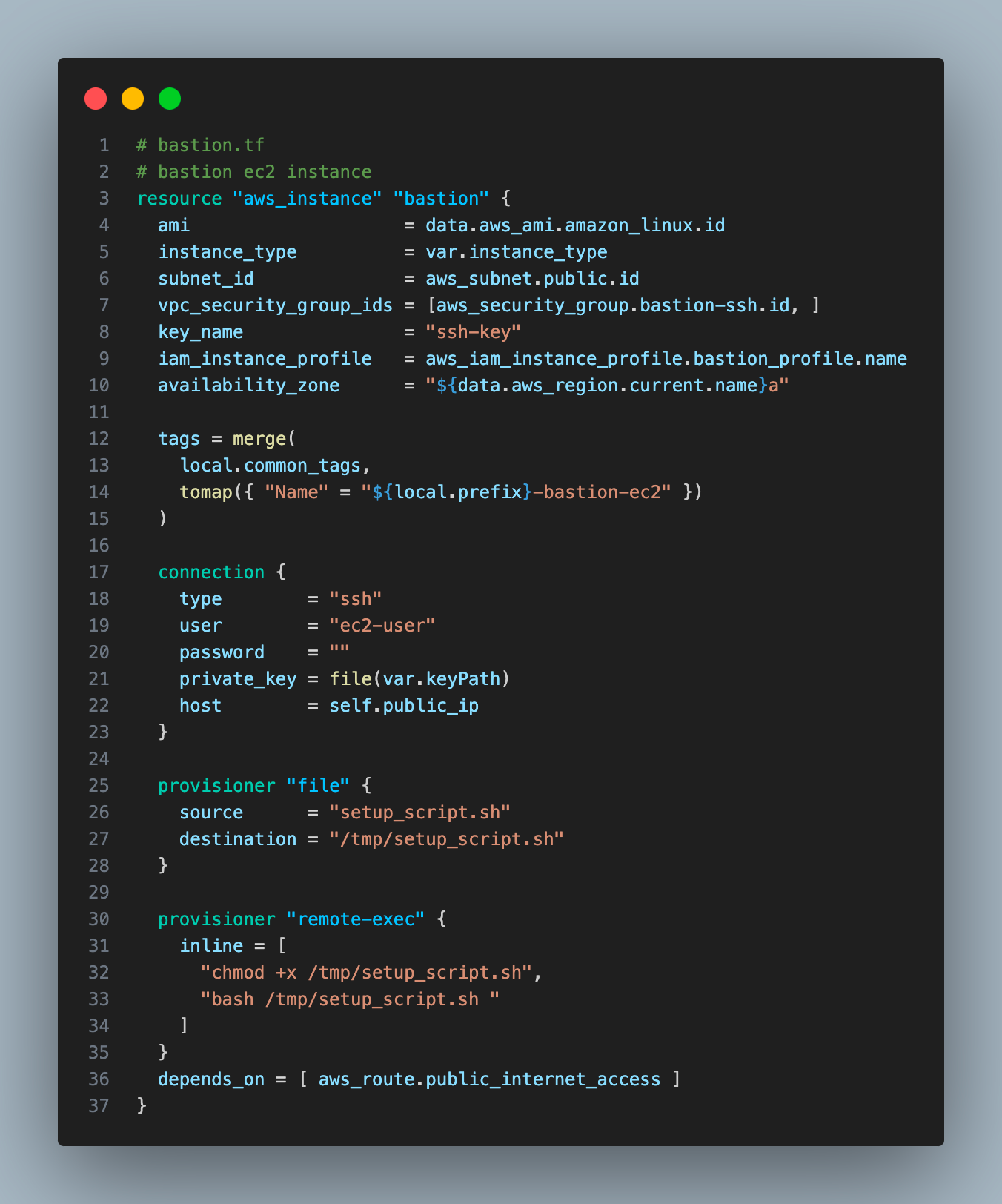

4. Create a bastion EC2 instance in the public subnet; it will have a public IP automatically assigned to it.

- key_name = "ssh-key" uses the SSH key pair we created to connect to the EC2 instance.

- I have used provisioners to copy the script to the EC2 instance (file provisioner) and run it remotely (remote-exec provisioner). The script runs after the bastion instance is created and installs the AWS CLI.

- To use provisioners, we also need to define a connection block with the settings Terraform will use to run them.

type = "ssh" -> SSH connection.

user = "ec2-user" -> The default user name on Amazon Linux AMIs, created when the instance is launched. Other AMIs use different defaults (for example, ubuntu on Ubuntu AMIs).

private_key = file(var.keyPath) -> Uses Terraform's file() function to read the SSH private key we created and downloaded earlier from its local path. This key allows the provisioners to talk to the EC2 instance via SSH.

host = self.public_ip -> References the public IP of the bastion instance.
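Putting those pieces together, a bastion instance with a connection block and both provisioners could be sketched as follows. The resource names, the t2.micro instance type, the AMI data source data.aws_ami.amazon_linux, and the script name install-aws-cli.sh are assumptions, not taken from the repo.

```hcl
resource "aws_instance" "bastion" {
  ami                    = data.aws_ami.amazon_linux.id
  instance_type          = "t2.micro"
  subnet_id              = aws_subnet.public.id
  vpc_security_group_ids = [aws_security_group.ec2_sg.id]
  iam_instance_profile   = aws_iam_instance_profile.ec2_profile.name
  key_name               = "ssh-key" # key pair created manually earlier

  # How Terraform reaches the instance to run the provisioners below.
  connection {
    type        = "ssh"
    user        = "ec2-user"
    private_key = file(var.keyPath)
    host        = self.public_ip
  }

  # Copy the (hypothetical) install script from the local machine...
  provisioner "file" {
    source      = "install-aws-cli.sh"
    destination = "/tmp/install-aws-cli.sh"
  }

  # ...then run it remotely over the same SSH connection.
  provisioner "remote-exec" {
    inline = [
      "chmod +x /tmp/install-aws-cli.sh",
      "sudo /tmp/install-aws-cli.sh",
    ]
  }
}
```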

A Little About Terraform Provisioners

Terraform provisioners are used to execute scripts or commands on local or remote machines after a resource is created. They are mostly used for automating software installation and other infrastructure management tasks.

Terraform provides multiple types of provisioners, such as:

- local-exec for local execution

- file to copy files to the remote instance

- remote-exec for remote execution on instances
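The bastion uses file and remote-exec; local-exec is the one type not shown elsewhere. As a minimal sketch, assuming Terraform 1.4 or later (for the built-in terraform_data resource) and a bastion resource named aws_instance.bastion (hypothetical), this runs a command on the machine where Terraform runs and saves the bastion's public IP to a local file:

```hcl
resource "terraform_data" "save_bastion_ip" {
  # Replace this resource (and rerun the provisioner) when the IP changes.
  triggers_replace = [aws_instance.bastion.public_ip]

  # Runs on your laptop, not on the instance.
  provisioner "local-exec" {
    command = "echo ${aws_instance.bastion.public_ip} > bastion_ip.txt"
  }
}
```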

Now we will deploy the AWS resources using Terraform

Let's export the access key ID and secret access key as environment variables:

```shell
export AWS_ACCESS_KEY_ID="access-key-id"
export AWS_SECRET_ACCESS_KEY="access-key-secret"
```

Let's create the rest of the config before we run terraform apply to deploy the resources.

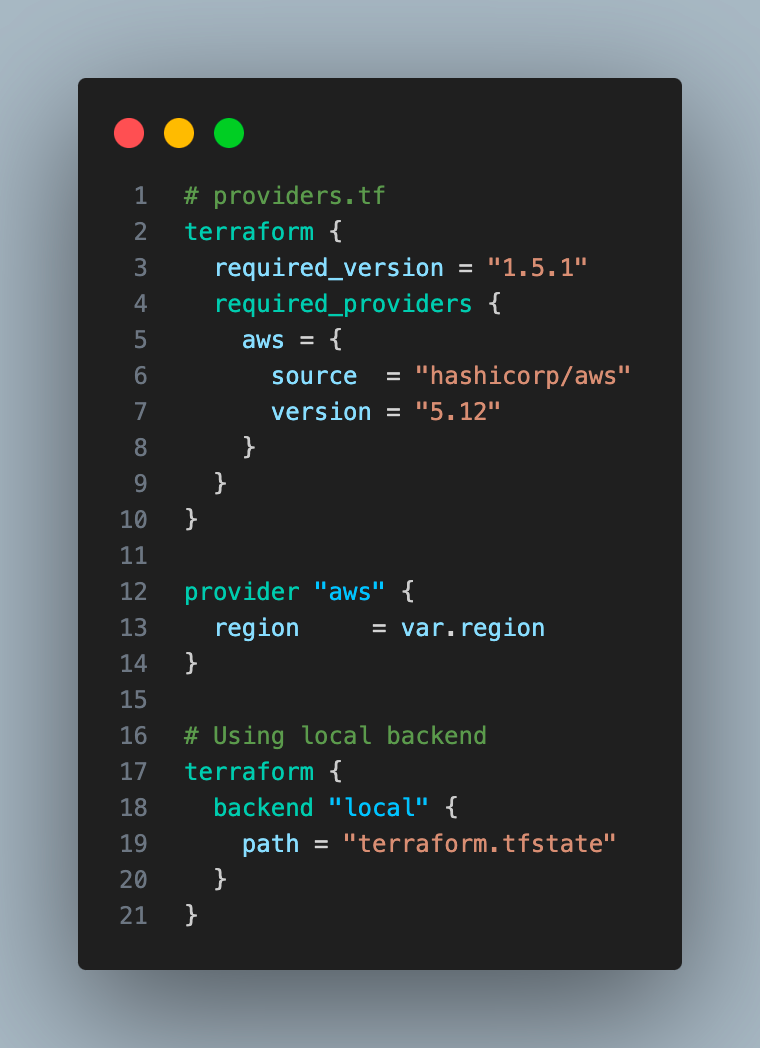

providers.tf

Make sure your Terraform version matches the one in required_version. You can use tfenv/tfswitch to install and use multiple versions of Terraform.
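A providers.tf along these lines would work; the version constraints are hypothetical, and the ap-south-1 region is taken from the console URL used later in this post. No credentials appear in the file: the AWS provider reads the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables exported earlier.

```hcl
terraform {
  # Hypothetical constraint; match it to the version you have installed.
  required_version = ">= 1.4.0"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }
}

# Credentials come from the exported environment variables.
provider "aws" {
  region = "ap-south-1"
}
```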

variables.tf

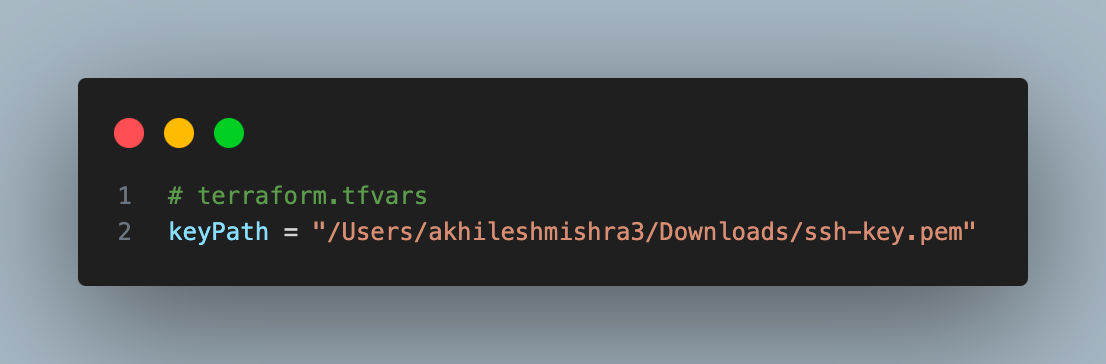

We can use a *.tfvars file to store the values of variables.

terraform.tfvars
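As an illustration, variables.tf could declare the keyPath variable used by the connection block, with terraform.tfvars supplying its value. The description text and the key path are hypothetical placeholders.

```hcl
# variables.tf
variable "keyPath" {
  description = "Local path to the private key of the ssh-key key pair"
  type        = string
}

# terraform.tfvars would then set the value, for example:
# keyPath = "/path/to/ssh-key.pem"
```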

data.tf
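data.tf holds the data sources. A sketch, assuming the AMI is looked up rather than hard-coded: the aws_ami filter below is an assumption that selects the latest Amazon Linux 2023 x86_64 image published by Amazon, whose default user is ec2-user.

```hcl
# Account ID of the credentials Terraform runs with; used in the bucket name.
data "aws_caller_identity" "current" {}

# Assumption: latest Amazon Linux 2023 x86_64 AMI owned by Amazon.
data "aws_ami" "amazon_linux" {
  most_recent = true
  owners      = ["amazon"]

  filter {
    name   = "name"
    values = ["al2023-ami-2023.*-x86_64"]
  }
}
```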

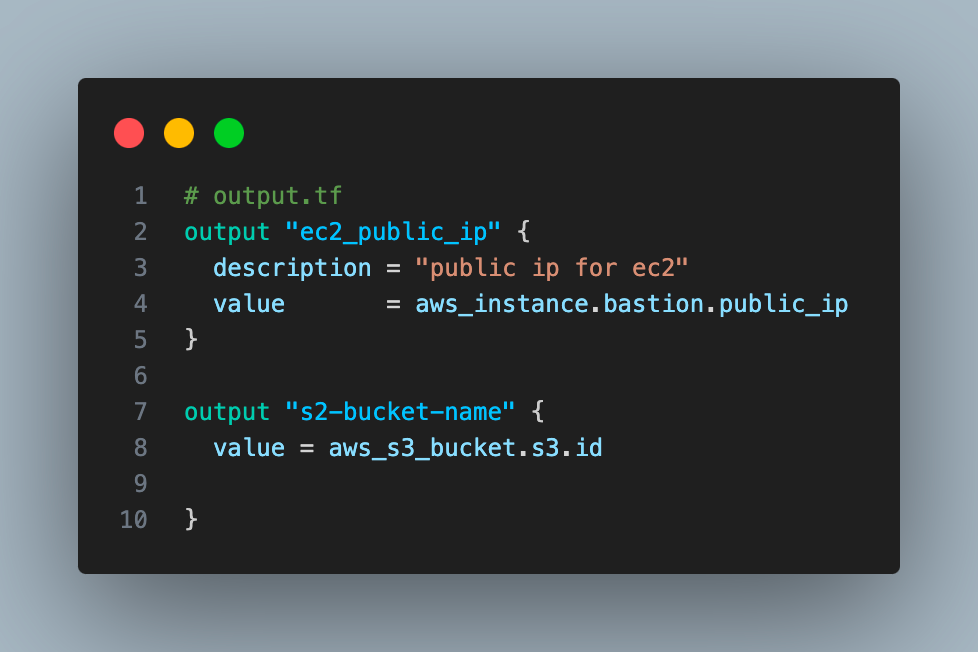

We can also use the Terraform output block to define values that will be exposed to us once the infrastructure is provisioned.

output.tf
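An output.tf that returns the two values mentioned below might look like this, assuming the resource names aws_instance.bastion and aws_s3_bucket.storage (hypothetical):

```hcl
output "bastion_public_ip" {
  description = "Public IP of the bastion instance"
  value       = aws_instance.bastion.public_ip
}

output "bucket_name" {
  description = "Name of the S3 bucket"
  value       = aws_s3_bucket.storage.bucket
}
```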

Now we can deploy the code. I have kept all the code in the public GitHub repo.

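```shell
# Download the AWS provider and initialize the working directory (first run only)
terraform init

# Review the plan and create the resources
terraform apply
```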

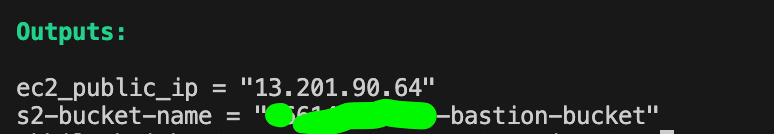

It deploys the resources and returns the IP address of the bastion EC2 instance, and S3 bucket name as defined in the output block.

Note: The public IP attached to the EC2 instance is not permanent: when you stop and start the instance, it is assigned a new public IP (a reboot keeps the same one). If you need a fixed IP, use an Elastic IP.
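If you do want a fixed address, a minimal sketch is an aws_eip resource attached to the bastion, assuming the bastion is named aws_instance.bastion (hypothetical) and AWS provider v5 syntax:

```hcl
# Allocates an Elastic IP and associates it with the bastion instance.
resource "aws_eip" "bastion" {
  instance = aws_instance.bastion.id
  domain   = "vpc" # v5 syntax; older provider versions used vpc = true
}
```

With an Elastic IP attached, output aws_eip.bastion.public_ip instead of the instance's own public_ip, since the address the instance was launched with is replaced.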

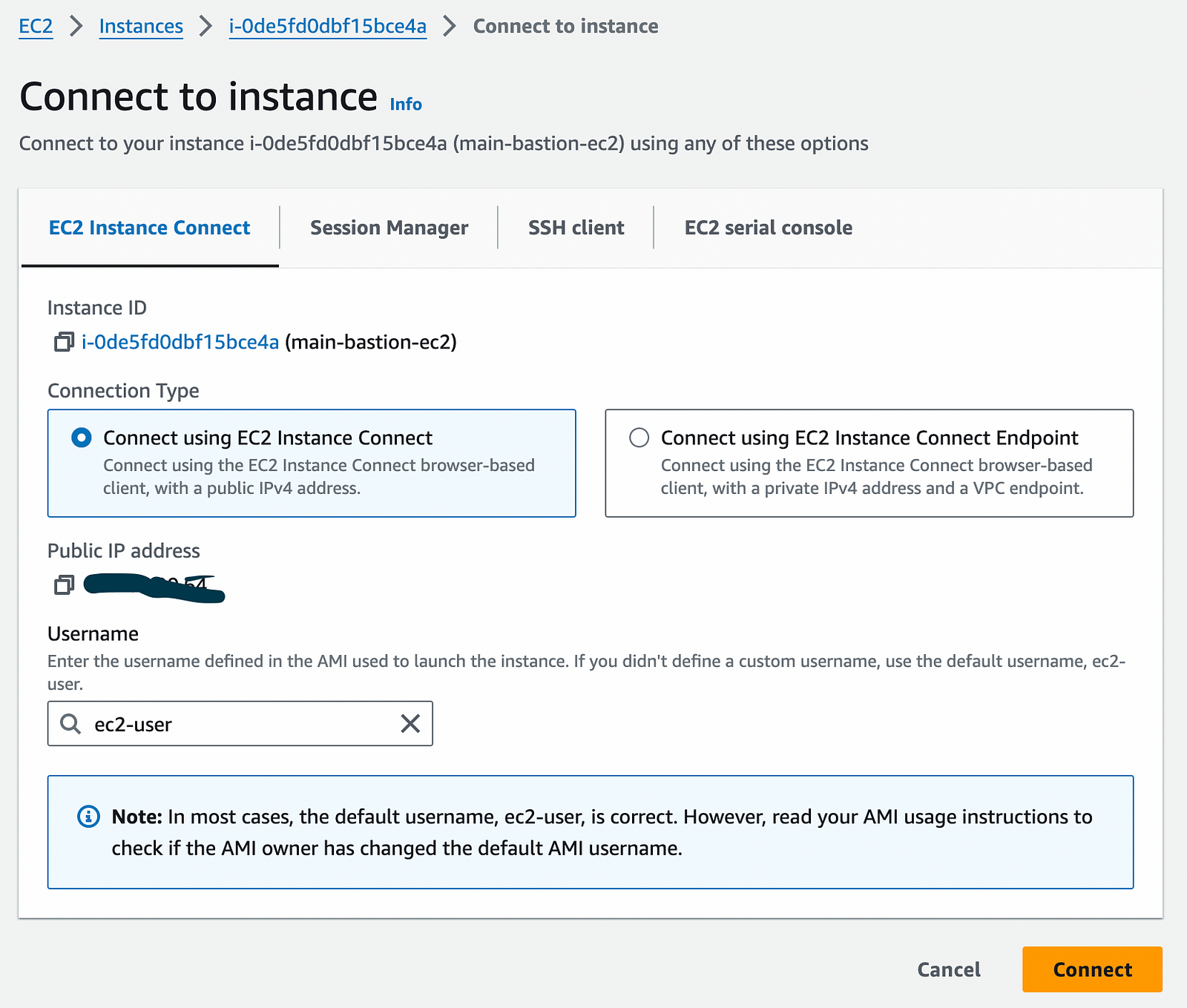

If you head to your AWS console and go to EC2 -> https://ap-south-1.console.aws.amazon.com/ec2/home?region=ap-south-1

You will find the ec2 instance running. You can connect to it via SSH, either from the AWS console or your local machine.

We will log in to this instance from our laptop, using the terminal (on Mac) or PowerShell/cmd (on Windows), with the SSH key we created.

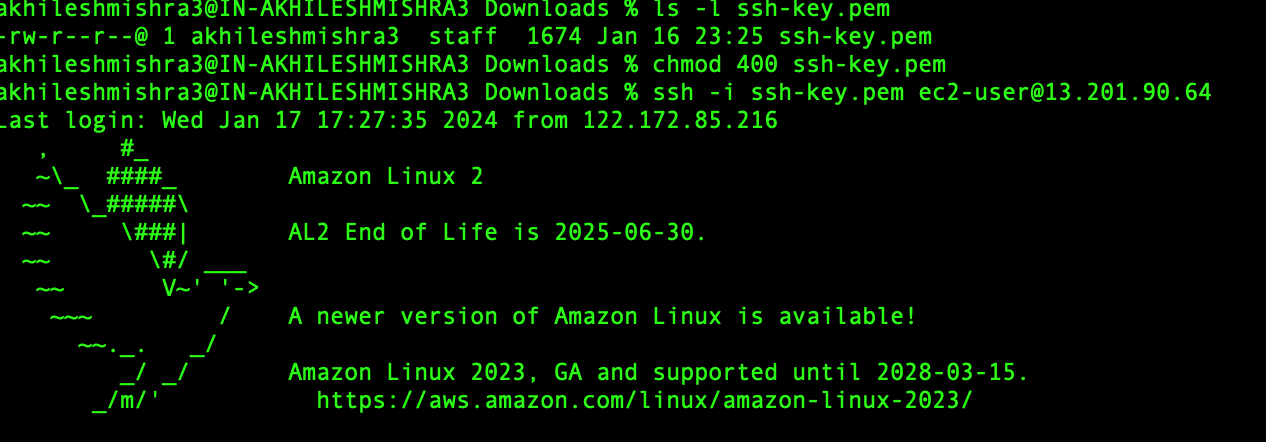

```shell
# General form: ssh -i <private-key> user-name@public-ip

# Go to the folder where you stored the key. SSH refuses a private key that
# other users can read, so give it 400 (read-only for you) permissions.
# ssh-key.pem is the key we created and kept in the Downloads folder.
ls -l ssh-key.pem
chmod 400 ssh-key.pem

ssh -i ssh-key.pem ec2-user@13.201.90.64
```

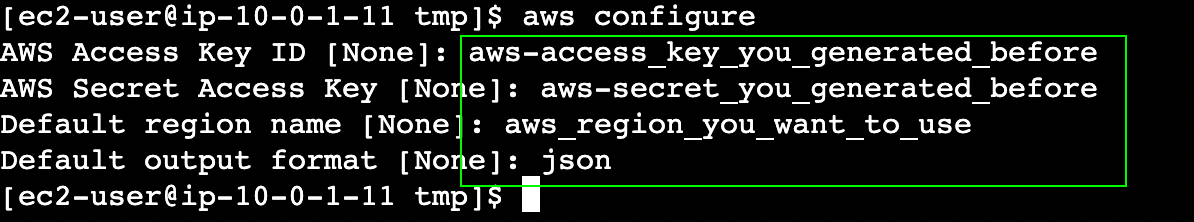

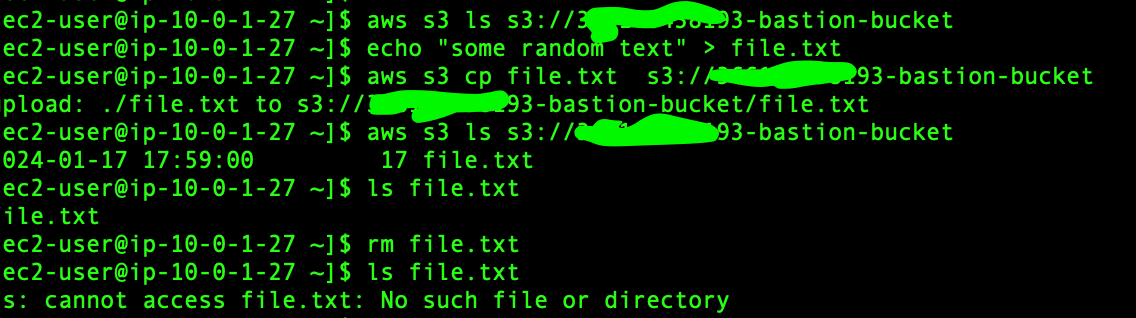

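Now we will use the AWS CLI to access the S3 bucket: create a file and upload it to the bucket. Before we can do that, let's configure the AWS CLI using the aws configure command. Because the instance has the IAM instance profile attached, the CLI can obtain temporary credentials from the role, so you can leave the access key prompts empty and only set the default region (the one you deployed to). Access keys entered here would take precedence over the role.

```shell
aws configure
```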

We can use the aws s3 commands to talk to S3.

```shell
# List the bucket contents
aws s3 ls s3://<bucket-name>

# Create a file with some content
echo "some random text" > file.txt

# Upload the file to the bucket
aws s3 cp file.txt s3://<bucket-name>

# List the bucket contents again
aws s3 ls s3://<bucket-name>
```

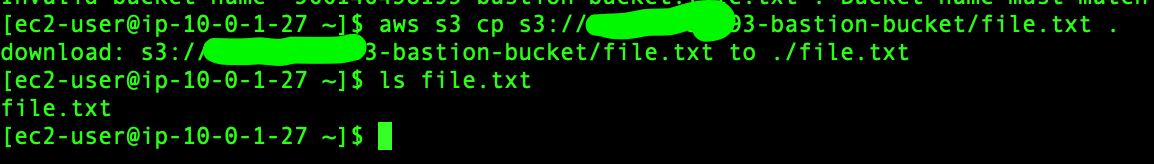

Now we will download the file from the bucket:

```shell
# Download the object into the current directory
aws s3 cp s3://<bucket-name>/file-name .
```

This is how we can connect with the S3 bucket over the internet with EC2’s public IP.

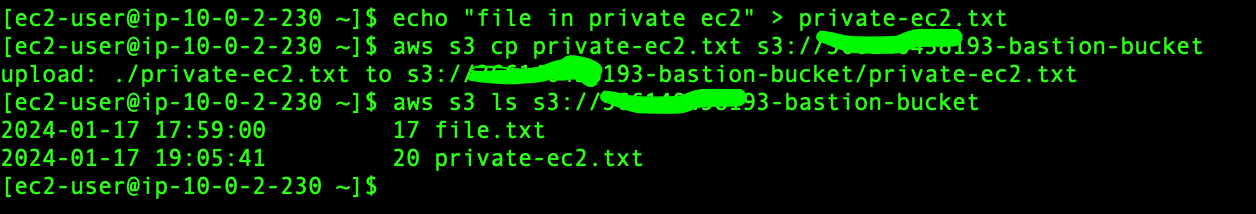

Now we will use the VPC gateway endpoint to connect to the S3 bucket from an EC2 instance (without a public IP) in the private subnet.

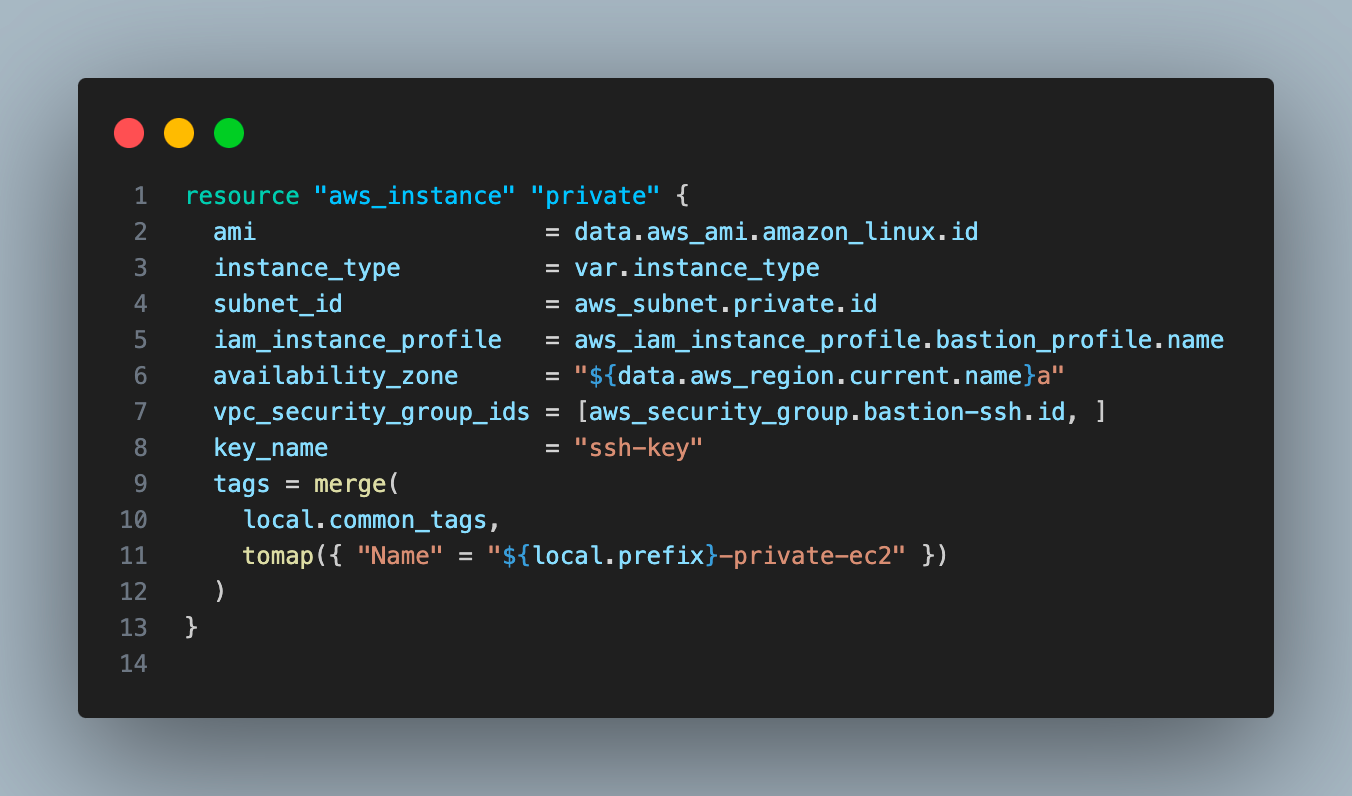

Create an EC2 instance in the private subnet.

We used the same security group, instance profile, and SSH key as the bastion EC2 instance. We will use a user data script to install AWS CLI.
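A sketch of the private instance, assuming the same hypothetical resource names used for the bastion. One caveat: the private subnet has no internet route, so a user data script that downloads an installer would fail there. Amazon Linux AMIs already ship with the AWS CLI, so this sketch only logs the installed version. It also outputs the private IP needed for the SSH hop from the bastion.

```hcl
resource "aws_instance" "private" {
  ami                         = data.aws_ami.amazon_linux.id
  instance_type               = "t2.micro"
  subnet_id                   = aws_subnet.private.id
  vpc_security_group_ids      = [aws_security_group.ec2_sg.id]
  iam_instance_profile        = aws_iam_instance_profile.ec2_profile.name
  key_name                    = "ssh-key"
  associate_public_ip_address = false # no public IP for this instance

  # Runs once at first boot as root.
  user_data = <<-EOF
    #!/bin/bash
    # The AWS CLI is preinstalled on Amazon Linux; just record its version.
    aws --version > /var/log/aws-cli-version.log 2>&1
  EOF
}

output "private_instance_ip" {
  description = "Private IP to SSH to from the bastion"
  value       = aws_instance.private.private_ip
}
```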

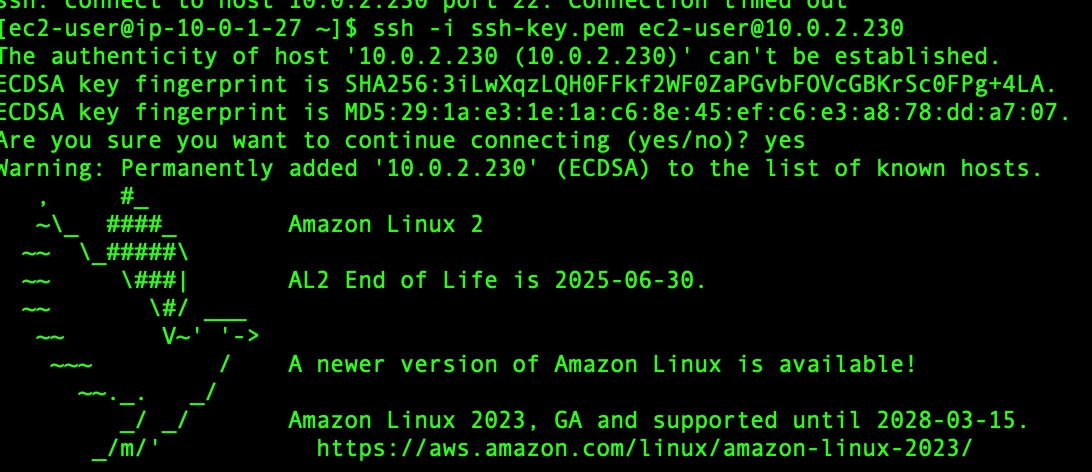

We cannot connect to this instance directly because it does not have a public IP; instead, we connect to it through the bastion instance. EC2 instances in the same VPC can communicate with each other using their private IPs, as long as their security groups allow it.

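```shell
# From your laptop: copy the SSH key to the bastion instance
scp -i ssh-key.pem ssh-key.pem ec2-user@13.201.90.64:/home/ec2-user

# Log in to the bastion instance
ssh -i ssh-key.pem ec2-user@13.201.90.64

# From the bastion: log in to the private instance using its private IP
ssh -i ssh-key.pem ec2-user@<private-ip>

# On the private instance: configure the CLI (set the default region)
aws configure
```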

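Now, if you try to access the bucket from the private instance (for example, by listing its contents with aws s3 ls), the request will not succeed: the private subnet has no route to S3, so the command hangs and eventually times out.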

Now we will create a VPC gateway endpoint and it should enable you to talk to the S3 bucket from a private EC2 instance.

Create a VPC gateway endpoint in the VPC and associate it with the route table associated with a private subnet

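What is a VPC gateway endpoint?

A gateway endpoint lets resources in your VPC reach S3 using private IP addresses. When you associate it with a route table, AWS adds a route whose destination is the S3 prefix list (the service's IP ranges in that region) and whose target is the endpoint, so S3 traffic from that subnet stays on the AWS network.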

- Currently, S3 and DynamoDB are the only AWS services that support gateway endpoints; for other services, you can use a VPC interface endpoint.

- It is free, unlike a VPC interface endpoint, which is billed per hour and per GB of data processed.
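A minimal sketch of the gateway endpoint, assuming the VPC and private route table are named aws_vpc.main and aws_route_table.private (hypothetical) and the ap-south-1 region:

```hcl
resource "aws_vpc_endpoint" "s3" {
  vpc_id            = aws_vpc.main.id
  service_name      = "com.amazonaws.ap-south-1.s3" # region is part of the name
  vpc_endpoint_type = "Gateway"

  # AWS adds a route to the S3 prefix list in each of these route tables.
  route_table_ids = [aws_route_table.private.id]
}
```

After terraform apply, rerunning the aws s3 ls command from the private instance should succeed. A gateway endpoint only serves buckets in the same region as the VPC, so the CLI's default region should match the bucket's region.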

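Now, thanks to the VPC gateway endpoint, the private instance can reach S3 without a public IP, NAT gateway, or internet gateway. The traffic never leaves the AWS network, which is more secure and can also reduce latency compared with going over the internet.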

That is all for this blog post. See you in the next one.
