Learn DevOps with Advanced, real-world projects, live troubleshooting

A complete guide to migrating the RDS Postgres database with Python

Python Automation That Saved Our Client $1000/Month On Cloud Bills

I was working on a project to reduce cloud costs across multiple AWS accounts. We discovered many RDS Postgres databases were over-provisioned, wasting terabytes of storage.

AWS doesn’t let you simply reduce allocated RDS storage. You can’t even restore from a snapshot with less space. To fix this, you need to create a new RDS instance with less storage and restore the database there. It’s not straightforward, but it was necessary for over 100 databases.

To solve this, I wrote a Python script to automate the process. I packaged it as a Docker container, deployed it to ECS, and used a Lambda function to trigger it. This approach allowed us to efficiently right-size our databases and significantly cut down on unnecessary cloud spending.

Automating the AWS RDS Postgres database migration with boto3

Let me show you how to implement a similar solution in your environment. I will use AWS Python SDK boto3 to implement the automation.

As part of the database migration, I will follow the below steps.

- Use boto3 to pull the RDS instance detail and its storage utilization.

- Use the Postgres utility pg_dump to take the backup of the database.

- Duplicate the RDS instance with a new storage size, and name it with

-newsuffix. - Wait for the new database to be available.

- Restore the new database from the database dump taken in the previous step with the pg_restore utility.

- Swap the original database and the duplicated one by renaming them.

- Stop the old DB instance and manually delete it after a few days.

Generating a database usage report

I have created a script and placed it in my GitHub repo. Let me create three Postgres database instances in my AWS account by running AWS CLI commands.

I will use an AWS EC2 Linux VM to run scripts for this blog post.

# Install the AWS CLI on a Linux machine, follow these steps

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

unzip awscliv2.zip

sudo ./aws/install# Create 3 RDS postgres instances.

aws rds create-db-instance \

--db-instance-identifier mydbinstance-1 \

--db-instance-class db.t3.micro \

--engine postgres \

--allocated-storage 50 \

--master-username mymasteruser \

--master-user-password mypassword \

--backup-retention-period 7

aws rds create-db-instance \

--db-instance-identifier mydbinstance-2 \

--db-instance-class db.t3.micro \

--engine postgres \

--allocated-storage 70 \

--master-username mymasteruser \

--master-user-password mypassword \

--backup-retention-period 7

aws rds create-db-instance \

--db-instance-identifier mydbinstance-3 \

--db-instance-class db.t3.micro \

--engine postgres \

--allocated-storage 60 \

--master-username mymasteruser \

--master-user-password mypassword \

--backup-retention-period 7Wait for them to come up and then run the script.

git clone https://github.com/akhileshmishrabiz/python-for-devops

cd python-automations-projects/aws-rds-postgres-migration/

pip install -r requitements.txt

# Run the script

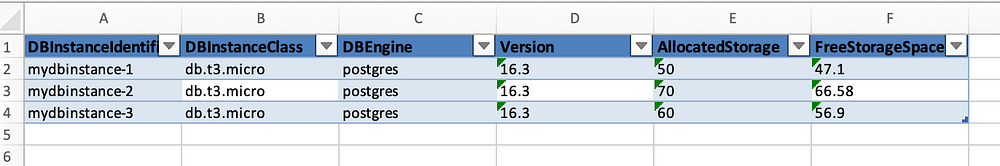

python3 rds_storage_report.pyThis will generate an Excel report, It will look something like this.

This report shows the used and allocated disk space, and it will help you decide if you can save some money by cutting down the storage.

Cleanup

aws rds delete-db-instance \

--db-instance-identifier mydbinstance-1 \

--skip-final-snapshot

aws rds delete-db-instance \

--db-instance-identifier mydbinstance-2 \

--skip-final-snapshot

aws rds delete-db-instance \

--db-instance-identifier mydbinstance-3 \

--skip-final-snapshotMigrating Postgres database to reduce cloud cost

I created the RDS Postgres database instance in the last blog post, Deploying Containers with AWS ECS. I will use the same RDS instant for this blog.

I used the parameter store parameter to store the DB link for all databases. I use the /database/<DB instance ID> format to store the DB link parameter. DB links can be used to access the database. I will create the DB link using the database details below.

postgresql://{db_user}:{db_password}@{db_host_endpoint}:{db_port}/{database_name}

Let’s start building the automation, one function at a time. I will explain each step

Note: I have placed the code used in this blog post in my GitHub repo python-for-devops.

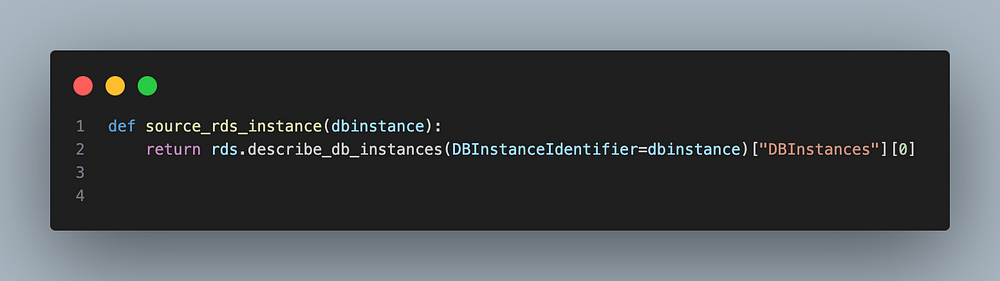

1. Get RDS details of the original DB instance

2. Get the storage utilization for the DB from CloudWatch monitoring

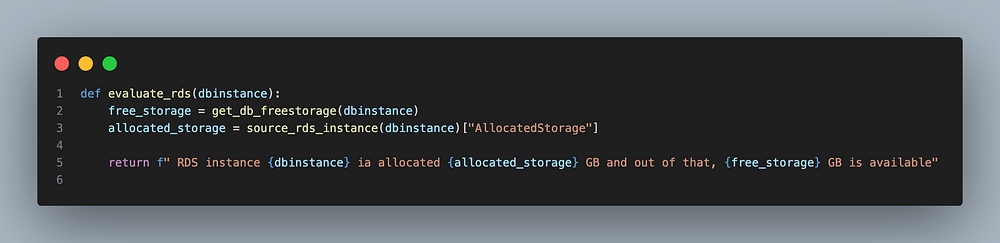

3. Compare the allocated and free disk space

Since we had stored the DB user, password, etc on a parameter store, we will pull that data.

4. DB details from the parameter store

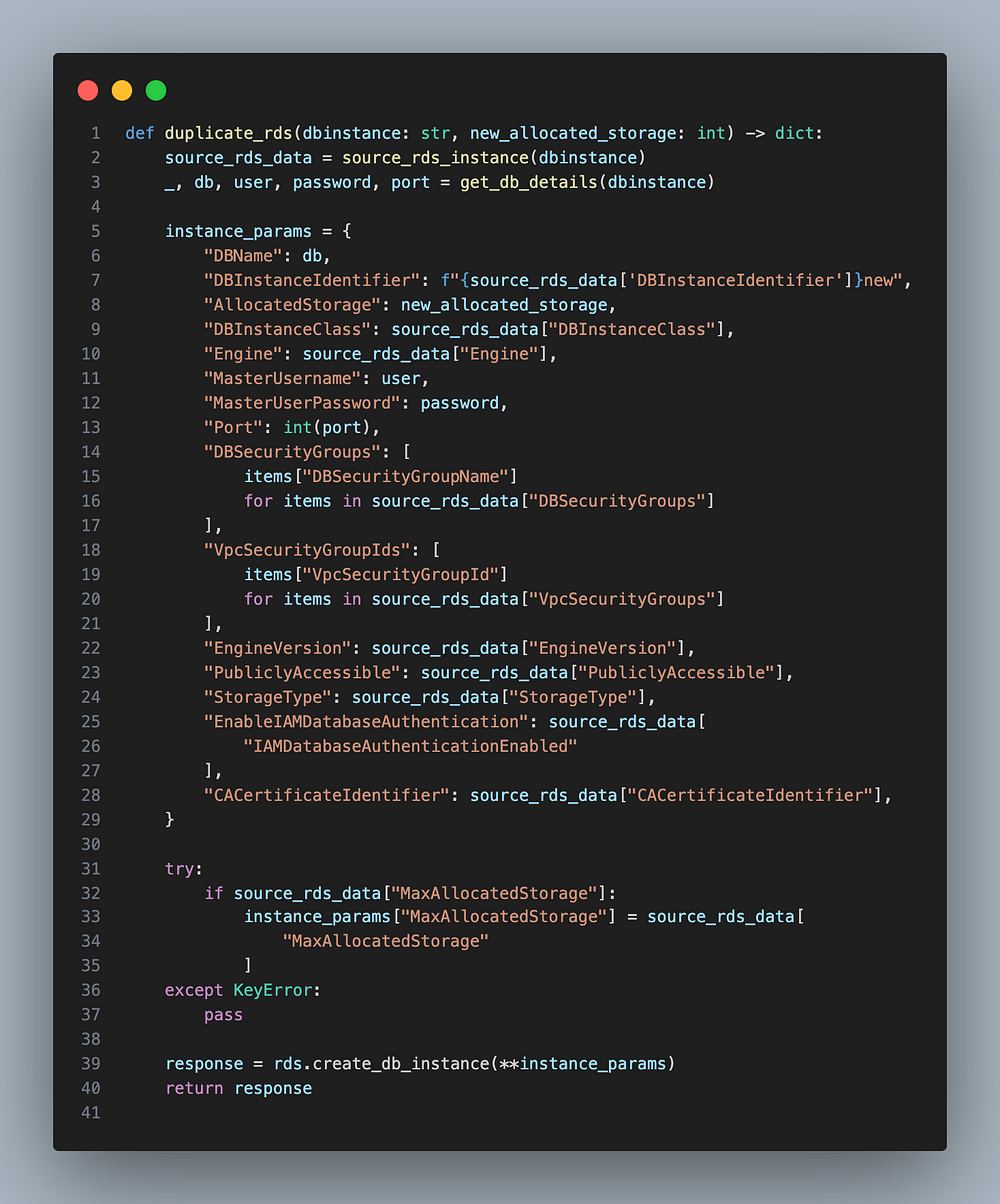

5. Duplicate the RDS instance with the new storage value

This function will duplicate the RDS instance with the new value for allocated disk space. The rest of the values will be the same as the original RDS instance.

Note: If you are using gp2 disks and want to migrate to gp3, you can update that value in the

StorageTypekey.

6. Check if RDS is ready

7. Take the backup of the Postgres database with the pg_dump utility.

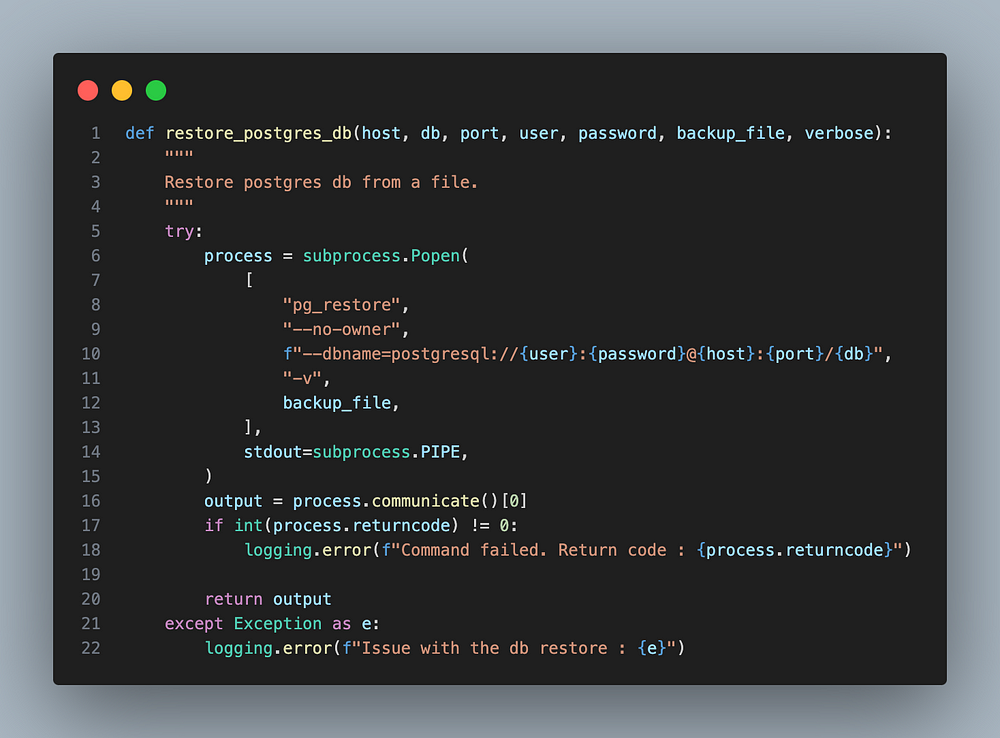

8. Restore the new RDS instance from the backup we took in the last step.

In this example, I used the backup from local storage to restore. You can add additional logic to upload the backup to an S3 bucket after taking a backup and downloading the backup from S3 before restoring if you have a special requirement.

9. Rename the RDS instance

This function will rename the database. I used the sleep from time module to wait instead of boto3 waiter as it is broken.

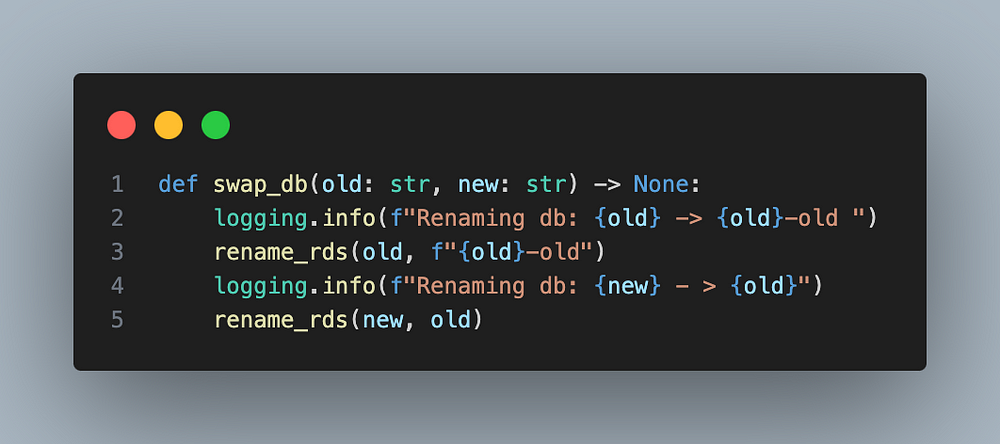

10. Swap the RDS instance.

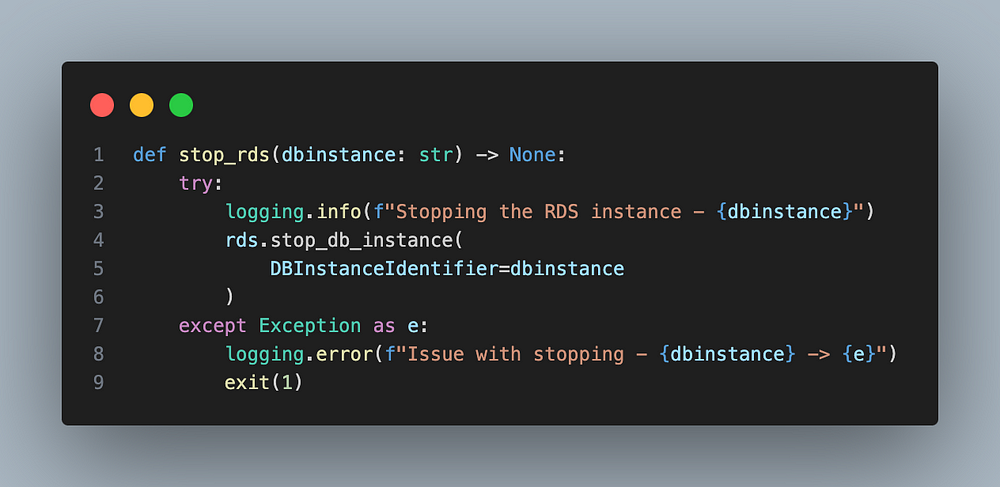

11. Stop the RDS instance

This function will stop the original RDS instance and we will use the new one.

We call all the above functions to migrate the Postgres RDS instance.

Running the script from the EC2 machine

Since I used an RDS instance with a private endpoint( i.e. no public IP to access from outside the network).

I launched an EC2 Ubuntu machine on the same VPC where my RDS instance exists. As my script will be using pg_dump and pg_restore commands for backup/restore, I will also need to install Postgres and other dependencies.

Installing dependencies on the Ubuntu machine.

sudo apt-get update

sudo apt-get install wget ca-certificates

wget --quiet -O - https://www.postgresql.org/media/keys/ACCC4CF8.asc | sudo apt-key add -

sudo sh -c 'echo "deb http://apt.postgresql.org/pub/repos/apt $(lsb_release -cs)-pgdg main" > /etc/apt/sources.list.d/pgdg.list'sudo apt-get update

sudo apt-get install postgresql-client-16

pg_dump --version

psql --version# install pip

apt install python3-pip# install aws cli

sudo apt install awscli -y- The security group attached to the Ubuntu machine allows inbound/outbound connection to RDS on the DB port(5432).

- The security group attached to RDS allows inbound connection on DB port(5432).

- The IAM role attached to EC2 allows relevant permissions. You can attach the admin role if you are testing it.

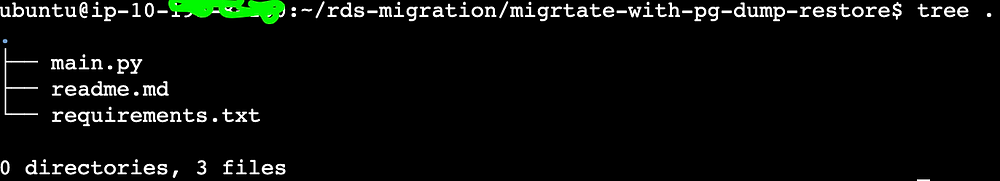

Instructions to run the script

Copy the script to your machine.

git clone https://github.com/akhileshmishrabiz/python-for-devops

cd python-automations-projects/aws-rds-postgres-migration/migrtate-with-pg-dump-restore

ls

Install python dependencies

pip install -r requirements.txtRun the script

# Evaluate the status of 'my-rds-instance-name'

python main.py evaluate <my-rds-instance-name

# Migrate 'my-rds-instance-name' with a new storage size(in GB)

python main.py migrate my-rds-instance-name < Allocated Storage>Final results

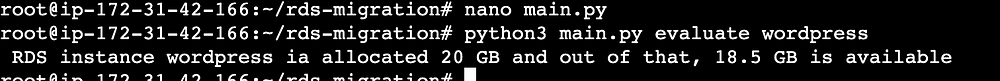

Calculating the storage usage of the RDS instance.

You can get the complete script from my Github Repo.

python3 main.py evaluate wordpress

As you can see most of the allocated storage is not used for this instance. I will reduce the RDS instance to half its size, 10 GB.

python3 main.py migrate wordpress 10

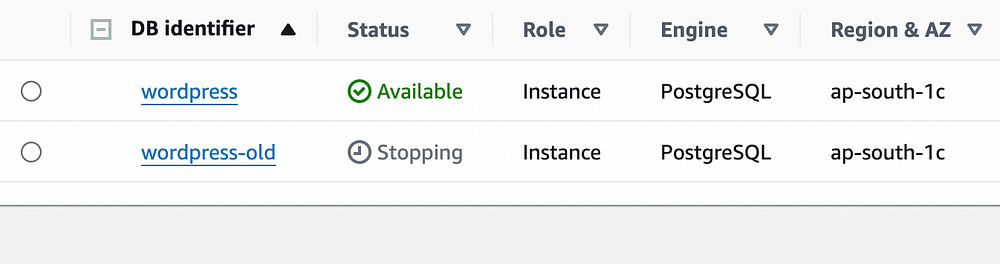

Here is the RDS console output

I have placed the complete code in my public GitHub repo python-for-devops.

Pitfalls

While testing my database migration automation I found that Pg_dump and Pg_restore might take significantly longer for large database migrations. My lead suggested I use, a great tool for live migration for Postgres databases. It was faster as it did not do backup/restore, instead it directly synced both databases.

Final words

I started with pg_dump/pg_restore but ended up not using it for my use case as this approach was slow (for larger databases) and required downtime. I used pgsync, a great tool for live database migrations.

I packed the script as a Docker image and used ECS to deploy the solution and Lambda to trigger the migration. I will write about it in my future blog

About me

I am Akhilesh Mishra, a self-taught Devops engineer with 11+ years working on private and public cloud (GCP & AWS)technologies.

I also mentor DevOps aspirants in their journey to devops by providing guided learning and Mentorship.

Mentorship with Akhilesh on Topmate

Longterm mentorship with Akhilesh on Preplaced

Connect with me on Linkedin: https://www.linkedin.com/in/akhilesh-mishra-0ab886124/